During the last few decades, technology has radically altered the distribution and assimilation of knowledge (Jenkins 2008; Tyner 1994). Different media channels offer different opportunities and challenges for learning (Norman 1988). A migration to Internet-based platforms poses problems for educators and students. There is an overwhelming amount of content available to learners, but the reliability of Internet sources is highly variable. Without proper curation, the burden of determining the reliability of Internet sources is placed upon the student. The lack of editorial review results in content that appears reliable to the student, but might be false, unsubstantiated, or mere opinion. The source a student uses may depend more on accessibility, intelligibility, and cognitive load than on reliability (Ginns and Leppink 2019). The availability of pithy Internet sources might draw students away from more reliable primary sources that are difficult to access or understand. Research is needed to quantify the relationship between the accessibility/intelligibility of source materials, the decision to use these materials, and performance measures of learning outcomes. Understanding the relationship between cognitive and metacognitive processes in critical thinking will inform educators on how to guide undergraduates who are sifting through a variety of information sources. Several metacognitive factors are known to influence critical thinking. In Tversky and Kahneman’s (1973) seminal work, individuals overestimated the probable outcome of unlikely events if the outcome was highly desired (e.g., the state lottery). It is now widely recognized that two systems contribute to decision making (Stanovich and West 2000). System 1 automatically processes incoming information without placing too large a demand on attention. System 2 requires effort, places significant demands on attention, and typically serves deliberated decisions. 
Complex mental calculations like 6543 × 438 are more likely to involve System 2, which is typically engaged when System 1 fails to produce an automatic solution to a problem. However, a classic cognitive reflection test (CRT) demonstrates that System 1 may still have influence over judgments when System 2 is engaged. Take the following for example: “A bat and a ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much does the ball cost? _____ cents.” The correct answer is 5 cents, but people usually rush to the more intuitive answer of 10 cents. Individuals are more likely to provide an incorrect answer that is immediately provided by System 1 than spend effort to arrive at the correct solution provided by System 2. Even when participants provide correct answers, evidence suggests the intuitive response was considered first (Frederick 2005).
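
The arithmetic behind the bat-and-ball item can be written out explicitly. As a brief worked sketch, let x stand for the price of the ball in dollars (a symbol introduced here only for illustration):

$$
x + (x + 1.00) = 1.10 \;\Longrightarrow\; 2x = 0.10 \;\Longrightarrow\; x = 0.05
$$

The intuitive answer of 10 cents fails this check, because a 10-cent ball implies a bat costing $1.10 and a total of $1.20.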

The relationship between cognitive Systems 1 and 2 may be particularly relevant for undergraduate students who are challenged with difficult texts. In recent years, STEM majors have been asked to read the primary scientific literature either for class or to support undergraduate research. In addition to the facts that find their way into textbooks, students need to be acculturated to scientific thinking and the iterative nature of research. One reason these texts are challenging is that they contain domain-specific vocabulary and jargon. Providing annotations and paraphrasing journal articles are two scaffolding methods that have helped students understand scientific literature (Falk et al. 2008; Kararo and McCartney 2019). Nevertheless, there is much to learn about how cognitive Systems 1 and 2 are engaged when reading difficult texts. Understanding how these cognitive and metacognitive factors influence the reading of difficult texts might be useful to educators.

Fluency is the degree to which information can be processed automatically. Fluently processed stimuli are presumably processed without effort. Conversely, disfluently processed stimuli require more effort to process. Processing fluency has been shown to influence judgments in various modalities, including semantic priming (e.g., Begg, Anas, and Farinacci 1992), visual clarity (e.g., Reber and Schwarz 1999), and phonological priming (e.g., McGlone and Tofighbakhsh 2000). The most researched variety of fluency, physical perceptual fluency, varies the saliency or discriminability of the target, including manipulating the legibility of font (Alter and Oppenheimer 2009). In these experiments, intelligible stimuli are judged to be more truthful (Schwarz 2004) and more likeable (Zajonc 1968) than less legible stimuli, and people are more confident about choices under intelligible conditions (Kelley and Lindsay 1993; Koriat 1993).

Recent studies suggest the effects of fluency on decision making are not entirely clear. Besken and Mulligan (2014) presented participants with intact or degraded auditory stimuli. Even though participants perceived their ability was worse in disfluent conditions, performance was paradoxically better when compared to fluent conditions. Backward masking studies have also provided evidence of a double dissociation between fluency and performance (Hirshman, Trembath and Mulligan 1994; Mulligan and Lozito 2004; Nairne 1988). Another group of studies found enhanced recall for words that were made disfluent by transposing or removing letters (Begg et al. 1991; Kinoshita 1989; Mulligan 2002; Nairne and Widner 1988). In these cases, disfluency may function as a signal that motivates the perceiver to further scrutinize the target, which may lead to improved performance (Alter et al. 2007).

Game-based learning systems have been defined as tabletop or digital ecosystems that respond to player choices in a manner that shapes behavior or fosters learning outcomes toward an explicit goal (All et al. 2015, 2016). Recently, game-based learning systems have been designed to foster critical thinking. For example, “Mission US” guides students through critical epochs in American history while teaching evidence-based reasoning (Mission US 2019). However, there are very few studies on either critical thinking in games or how fluency affects critical thinking in game-based learning systems (e.g., Cicchino 2015; Eseryel et al. 2014).

Understanding the relationship between fluency and critical thinking should inform educators, game designers, and educational technologists about how to design games for critical thinking. According to a naïve theory of fluency, intelligible and reliable sources of information should be preferred by students, judged as more useful, and result in improved performance on assessments of critical thinking. Theories that postulate a double dissociation between fluency and performance make different predictions. Specifically, relative to fluent conditions, unintelligible information or unreliable sources should result in decreased confidence and increased performance. To determine which of these theories predicts performance in game-based learning systems, the intelligibility of information and the reliability of its source were manipulated in a simple critical thinking game. This game-like experience was composed of elements one might find in a critical thinking game. However, the experiment required a stripped-down, reductionist model of a game to avoid confounding variables. Self-reports of confidence were compared to performance, and a double dissociation between the two measures was predicted. When either the information presented in the game was unintelligible or its source was unreliable, it was predicted that confidence would be lower and performance would be improved. When the information was intelligible or its source was reliable, confidence was expected to be higher and performance was expected to decline.

Experiment 1

The purpose of Experiment 1 was to determine if participants could make an association between the reported reliability of a source and its accuracy. For the purposes of this experiment, source reliability is defined as an observer’s perception of the probability that the source is accurate. In classic definitions, source reliability refers to the actual probability that the source is accurate (e.g., Bovens and Hartman 2003). Kahneman and Tversky would describe both of these situations as decision making under uncertainty, where probability is never truly known by the observer. However, in this experiment, participants were informed about the relative accuracy associated with each source. Thus, source reliability in this experiment can be considered equivalent to decision making under conditions of relative certainty. In the reliable-source condition, correct answers were more frequently associated with peer-reviewed sources. According to naïve models of fluency, participants were expected to use the source of information as a cue for the correct answer. In the unreliable-source condition, correct answers were pseudorandomly associated with each type of source 25% of the time. Performance in this condition was expected to be relatively worse because the cues did not reliably predict the correct answer.

Methods

Participants

York College is one of eleven senior colleges in the City University of New York (CUNY) consortium. The student body comprises just over 8,500 students who are pursuing over 70 B.A. and B.S. degrees. York is a Minority Serving Institution, and approximately 95% of students are supported by the state Tuition Assistance Program. Undergraduate students enrolled in lower division behavioral and social science courses (N = 78) were recruited from the York College Research Subject Pool using a cloud-based participant management software system (Sona Systems, Bethesda, MD). Participants received course credit for participation. Demographic data were not explicitly collected for this study, but the age (18.6 years old), gender (65.3% of the students identify as women, and 34.7% identify as men), and ethnic identity of the sample are thought to reflect the general population of the college (42.9% Black, 21.8% Hispanic, 27.1% Asian or Pacific Islander, 1% Native American, and 7.2% Caucasian). All policies and procedures comply with the APA ethical standards and were approved by the York College Internal Review Board. Participants were equally and randomly distributed into the reliable-source or unreliable-source conditions.

Apparatus

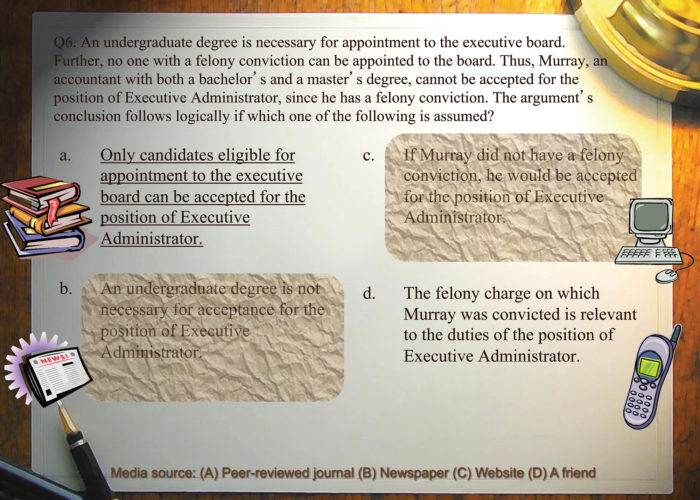

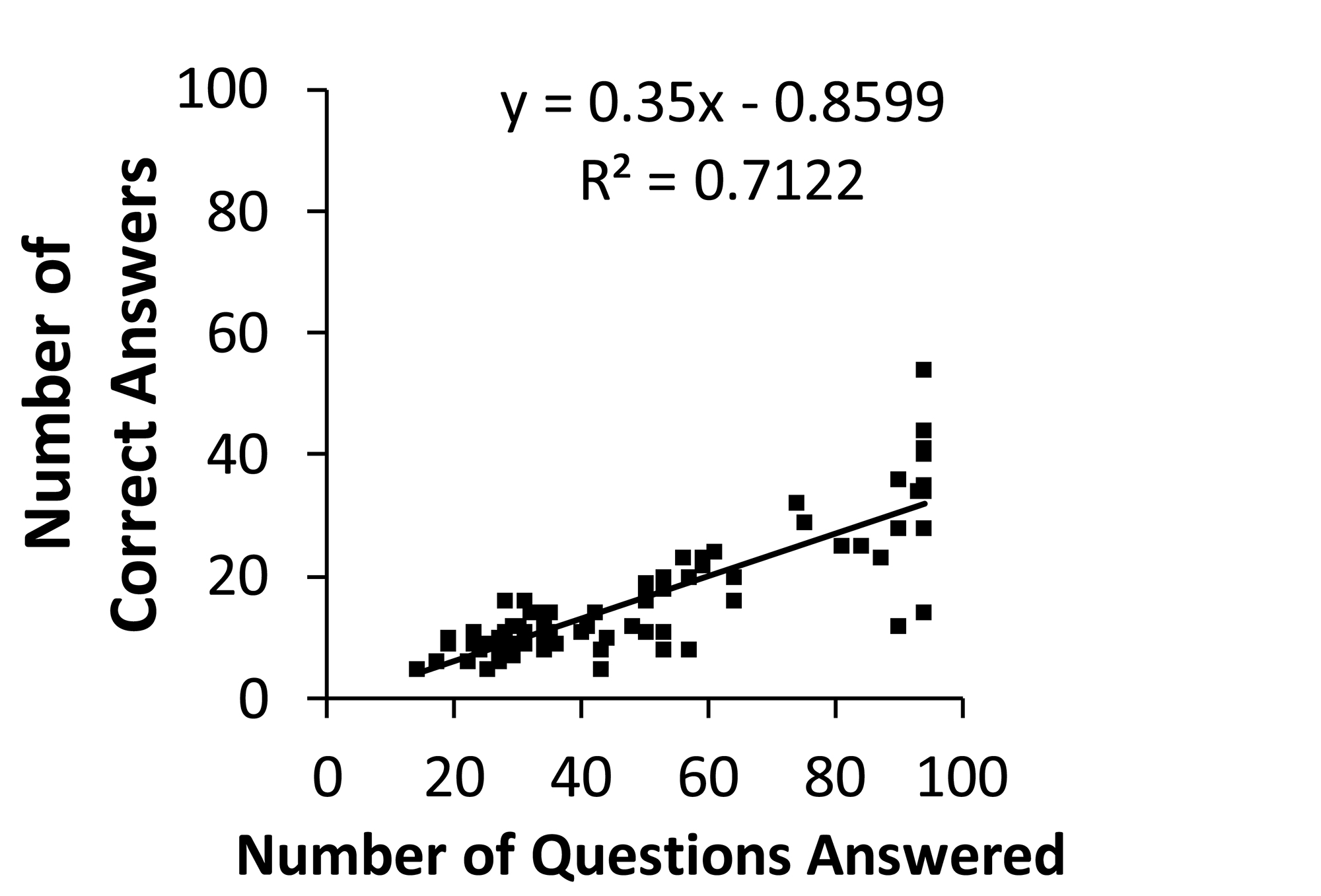

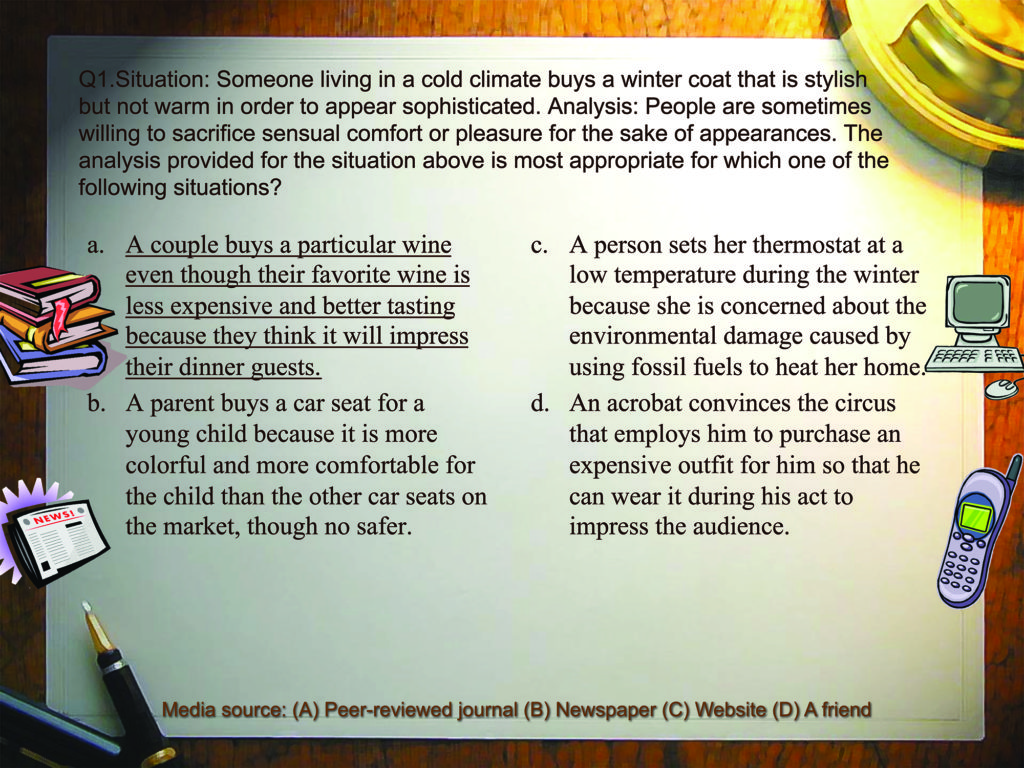

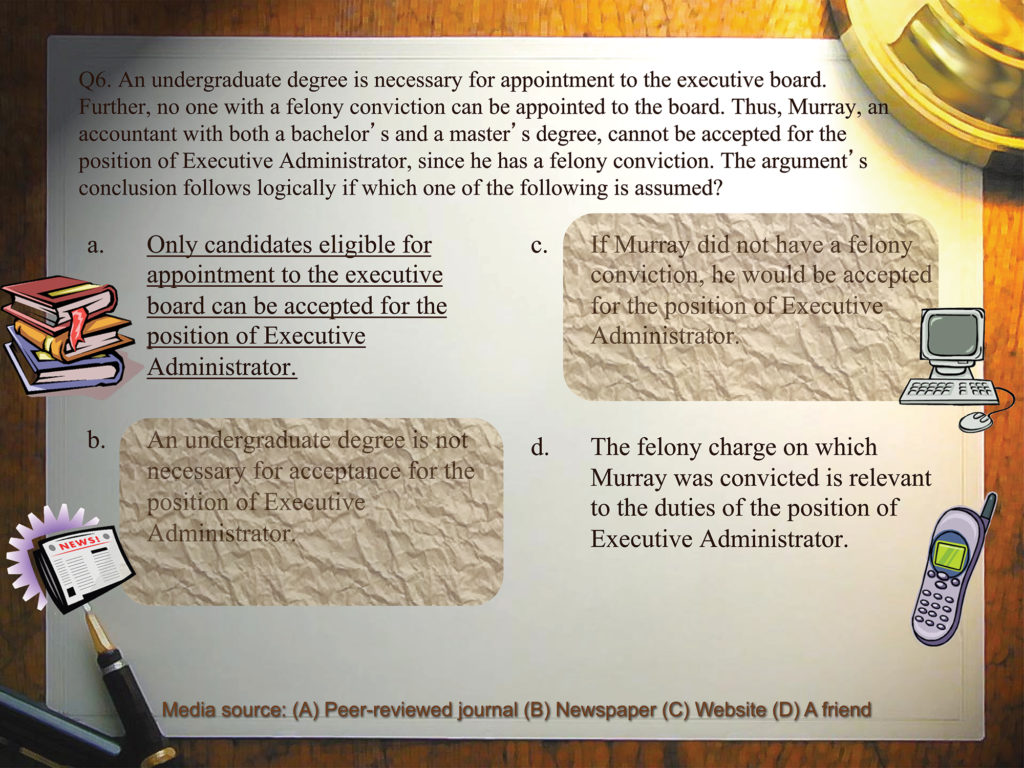

Participants were presented with a simple game where they selected the best of four possible solutions to a question in a four-alternative, forced-choice paradigm (4-AFC). The technology and the number of game mechanics were intentionally minimized to reduce the number of potential confounding variables. Ninety-four questions and answers were derived from the Logical Reasoning portion of the 2007 Law School Admissions Test (LSAT). Questions and possible answers were presented simultaneously on a 15” MacBook Pro laptop computer (Apple, Cupertino, CA) using PowerPoint (Microsoft Corp., Redmond, WA) (Figure 1).

Four different icons accompanied each of the four possible answers (book, newspaper, computer, and phone). Participants were told the book icon represented a peer-reviewed source that was “highly reliable.” The newspaper icon represented a “somewhat reliable” periodical that was not peer-reviewed. The computer icon represented a “somewhat unreliable” Internet source that was not peer-reviewed. The phone icon represented “highly unreliable” information from a friend. The book, newspaper, computer, and phone icons were reliably paired with the correct answer 50%, 25%, 12.5% and 12.5% of the time, respectively. Participants indicated their responses (A through D) using a score sheet and pencil. Participants were not allowed to correct answers from previous trials. After indicating their choice, participants could advance to the next slide where the correct answer was underlined on the screen. Participants were monitored to prevent cheating.
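
The cue structure described above can be illustrated with a short simulation. The following Python sketch is not the procedure used to build the stimuli. It assumes, purely for illustration, that the icon accompanying the correct answer is drawn independently on each trial with the reported probabilities, and it estimates the score of a hypothetical participant who always chooses the answer marked by one icon. The function and variable names are invented for this example.

```python
import random

# Pairing probabilities reported in the text for the reliable-source condition.
RELIABLE = {"book": 0.50, "newspaper": 0.25, "computer": 0.125, "phone": 0.125}
# Unreliable-source condition: each icon marks the correct answer 25% of the time.
UNRELIABLE = {icon: 0.25 for icon in RELIABLE}


def draw_correct_icons(weights, n_trials, seed=0):
    """Draw, for each trial, the icon that accompanies the correct answer.

    Assumption: independent draws per trial; the original stimuli may
    instead have used a fixed, counterbalanced pairing.
    """
    rng = random.Random(seed)
    icons = list(weights)
    return rng.choices(icons, weights=[weights[i] for i in icons], k=n_trials)


def follow_icon_accuracy(correct_icons, icon="book"):
    """Proportion correct for a participant who always picks the given icon."""
    return sum(c == icon for c in correct_icons) / len(correct_icons)


if __name__ == "__main__":
    for name, weights in (("reliable", RELIABLE), ("unreliable", UNRELIABLE)):
        trials = draw_correct_icons(weights, n_trials=94)
        print(name, round(follow_icon_accuracy(trials), 2))
```

Under these assumptions, a participant who always followed the book icon would approach 50% correct in the reliable-source condition and 25% (chance) in the unreliable-source condition as the number of trials grows.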

Procedures

After providing informed consent, participants were directed to one of three rooms measuring approximately 2 m². Each room was isolated and quiet, with only a laptop, desk, and chair. Participants were read instructions from a script, and they could ask questions for clarification. A sample question and answer were presented as a demonstration. Participants were instructed to correctly answer as many questions as they could within one hour. At the conclusion of the experiment, participants were debriefed about the general nature of the experiment using a script.

Discussion

Experiment 1 was conducted to determine whether students refer to the source of information when confronted with difficult content (detailed results are presented in Appendix B). Participants did not perform exceptionally well, which was expected given the difficulty of the task. However, they did perform better than chance. More importantly, there was no evidence that participants used the icons to guide their decisions. These results are less consistent with a naïve model of fluency and more consistent with dual-process theories (e.g., Petty and Cacioppo 1986; Kahneman 2003; Kruglanski and Van Lange 2012). Specifically, the difficulty of the content in Experiment 1 may have led participants to rely upon the deliberate cognitive System 2 rather than the more intuitive cognitive System 1, which would have likely revealed the correct answers on the assessment.

Considering that participants were told which sources were the most reliable, it is not unreasonable to expect that participants in the reliable-source condition could have achieved scores of at least 50% correct on average, and such a change should be easy to observe even with very few participants. Participants were too focused on the difficulty of the task to take advantage of the icons that reliably indicated the correct answers. These results suggest that, when faced with challenging texts, undergraduate students may ignore the source of the information. Instructors in higher education should use disciplinary literacy strategies such as sourcing to lead students away from random internet sources and toward more authoritative, peer-reviewed sources (Reisman 2012). Although this experiment uses a simplified model of game-based learning systems, the results imply that students who are engaged in more complicated game-based learning systems might also be too distracted by the difficulty of the content to use the source of information as a guide to the accuracy of the content.

Experiment 2

Additional participants were included in Experiment 2 to determine if increased statistical power would reveal a significant advantage for participants who were presented with reliable cues. All other methods were identical to Experiment 1. An additional assessment was used to measure the confidence of participants. Confidence and performance are correlated on a variety of tasks, including critical thinking (Bandura 1977; Ericsson, Krampe, and Tesch-Römer 1993; Harter 1978; Kuhl 1992; Nicholls 1984). Measurements of confidence and other affective assessments have provided insight as to why critical thinking varies between automatic System 1 and effortful System 2 cognitive processes (Alter and Oppenheimer 2009). This experiment assessed whether there was a double dissociation between the conditions of the independent variable (reliable-source versus unreliable-source) and the dependent variables (performance and confidence). According to the naïve model of fluency, participants who took advantage of cuing in the reliable-source condition were expected to have high confidence and perform better than students in the unreliable-source condition, who were expected to have low confidence and poor performance because of the lack of reliable cuing. By contrast, more recent models of fluency predicted confidence and performance would be inversely correlated.

Methods

Participants

Data were collected from 386 additional participants, who were randomly assigned in equal numbers (n = 193 each) to the reliable-source and unreliable-source conditions. Participants in the reliable-source group were presented with icons that reliably predicted correct and incorrect answers with the same probability as those in Experiment 1. Participants in the unreliable-source condition were presented with icons that were pseudorandomly associated with correct answers. The correct answer was paired with each icon 25% of the time.

Apparatus

In addition to the critical thinking assessment used in Experiment 1, participants were asked to complete a self-assessment of confidence. The assessment was derived from several validated measures of academic self-efficacy (Bandura 1977; Honicke and Broadbent 2016; Pintrich and DeGroot 1990; Robbins et al. 2004), which is defined as a learner’s assessment of their ability to achieve academic goals (Elias and MacDonald 2007). Rather than make predictive statements about academic success, participants reflected on their performance of the task. Five questions were counterbalanced to measure split-half reliability (e.g., “Was the game easy?” versus “Was the game difficult?”), yielding a total of ten questions. Participants indicated their responses to each question using a 5-point Likert scale {2 = agree; 1 = somewhat agree; 0 = neutral; -1 = somewhat disagree; and -2 = disagree}. The questions were presented in pseudorandom order. The complete list of questions appears in Appendix A.
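
One way such a counterbalanced scale might be scored is sketched below in Python. This is an illustration under stated assumptions rather than the scoring procedure actually used: the item identifiers are hypothetical, the choice of which items count as negatively worded is assumed, and reverse-scoring is implemented as a sign flip because the reported coding is symmetric around zero.

```python
from statistics import mean

# Coding reported in the text: 2 = agree ... -2 = disagree.
VALID = {-2, -1, 0, 1, 2}


def score_confidence(responses, reversed_items):
    """Return (positive-half mean, reversed-half mean, overall mean).

    responses: dict mapping item id -> rating in VALID.
    reversed_items: ids of negatively worded items (hypothetical here).
    """
    for item, rating in responses.items():
        if rating not in VALID:
            raise ValueError(f"item {item!r}: rating {rating} is off the scale")
    # The scale is symmetric around 0, so reverse-scoring is a sign flip.
    pos = [r for i, r in responses.items() if i not in reversed_items]
    neg = [-r for i, r in responses.items() if i in reversed_items]
    return mean(pos), mean(neg), mean(pos + neg)


if __name__ == "__main__":
    # Hypothetical participant: q1-q5 positively worded, q6-q10 negatively worded.
    ratings = {"q1": 2, "q2": 1, "q3": 0, "q4": 1, "q5": 2,
               "q6": -2, "q7": -1, "q8": 0, "q9": -1, "q10": -2}
    print(score_confidence(ratings, {"q6", "q7", "q8", "q9", "q10"}))
```

Comparing the two half-scale means across participants would give one simple index of split-half consistency, since a participant who answers consistently should obtain similar values on both halves after reverse-scoring.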

Procedures

All procedures were identical to those of Experiment 1 except participants were given additional instructions for completing the confidence assessment, which were read by the experimenters from a script. Participants were given 10 minutes to complete the 10-item assessment, which proved to be a sufficient amount of time.

Discussion

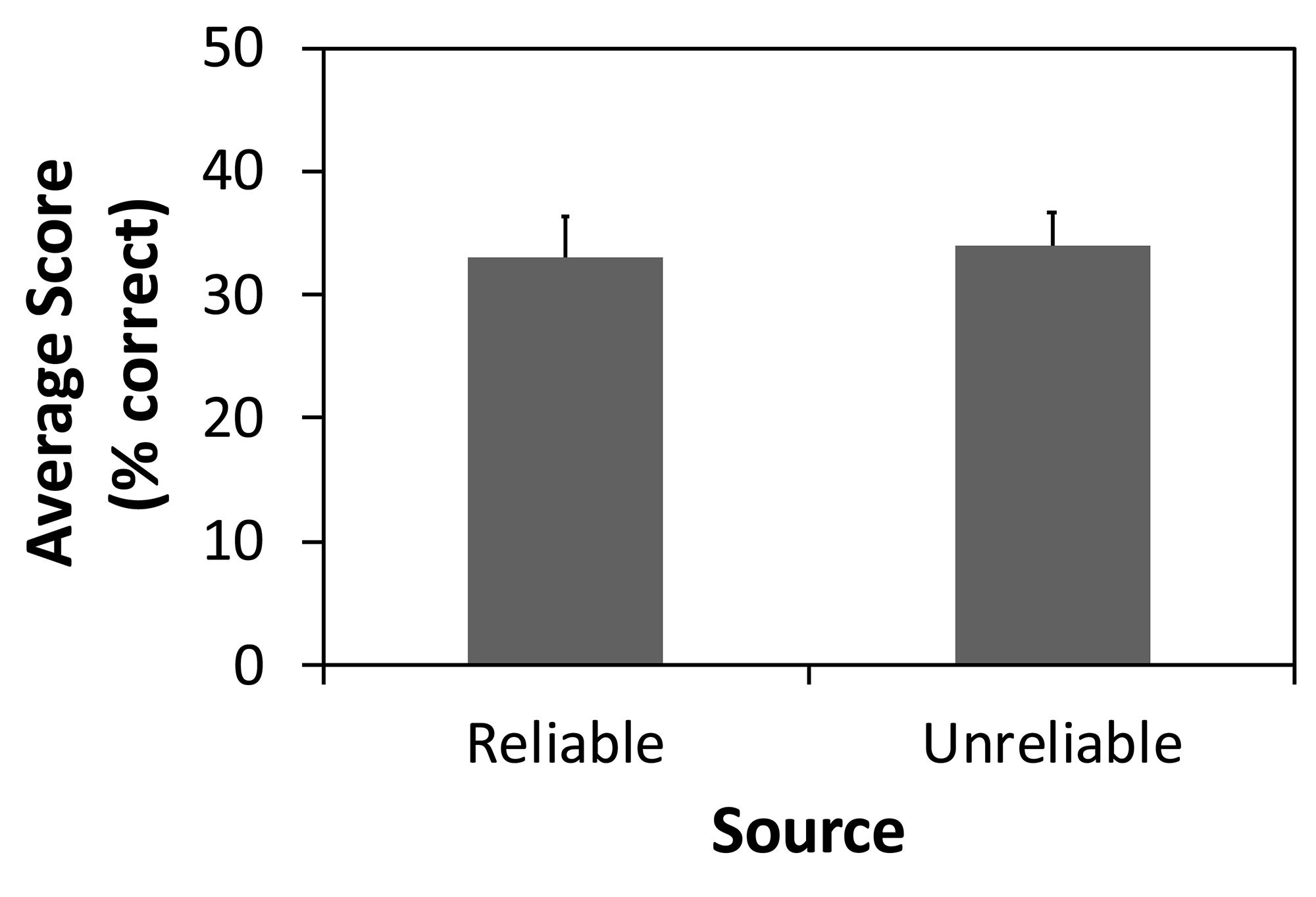

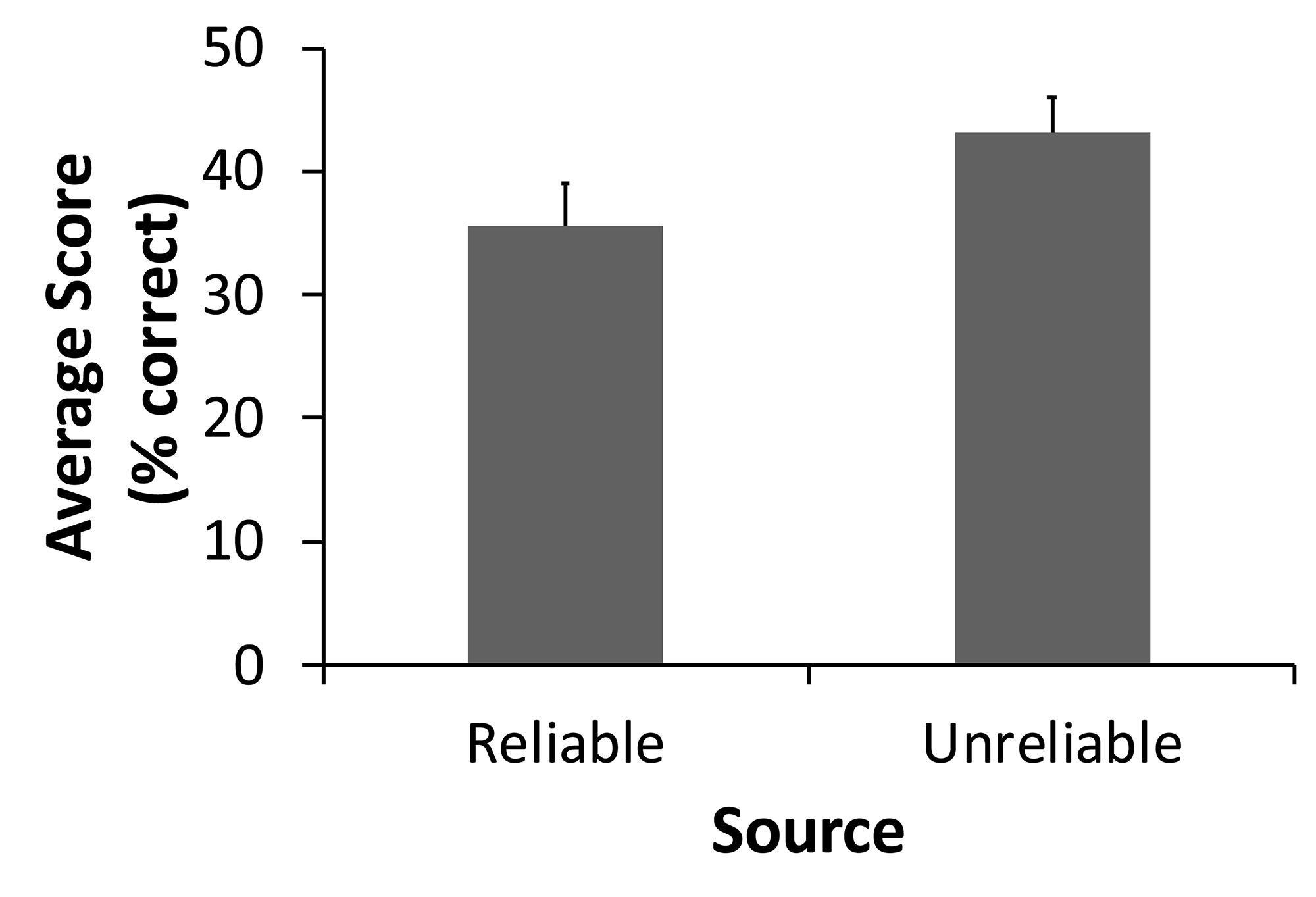

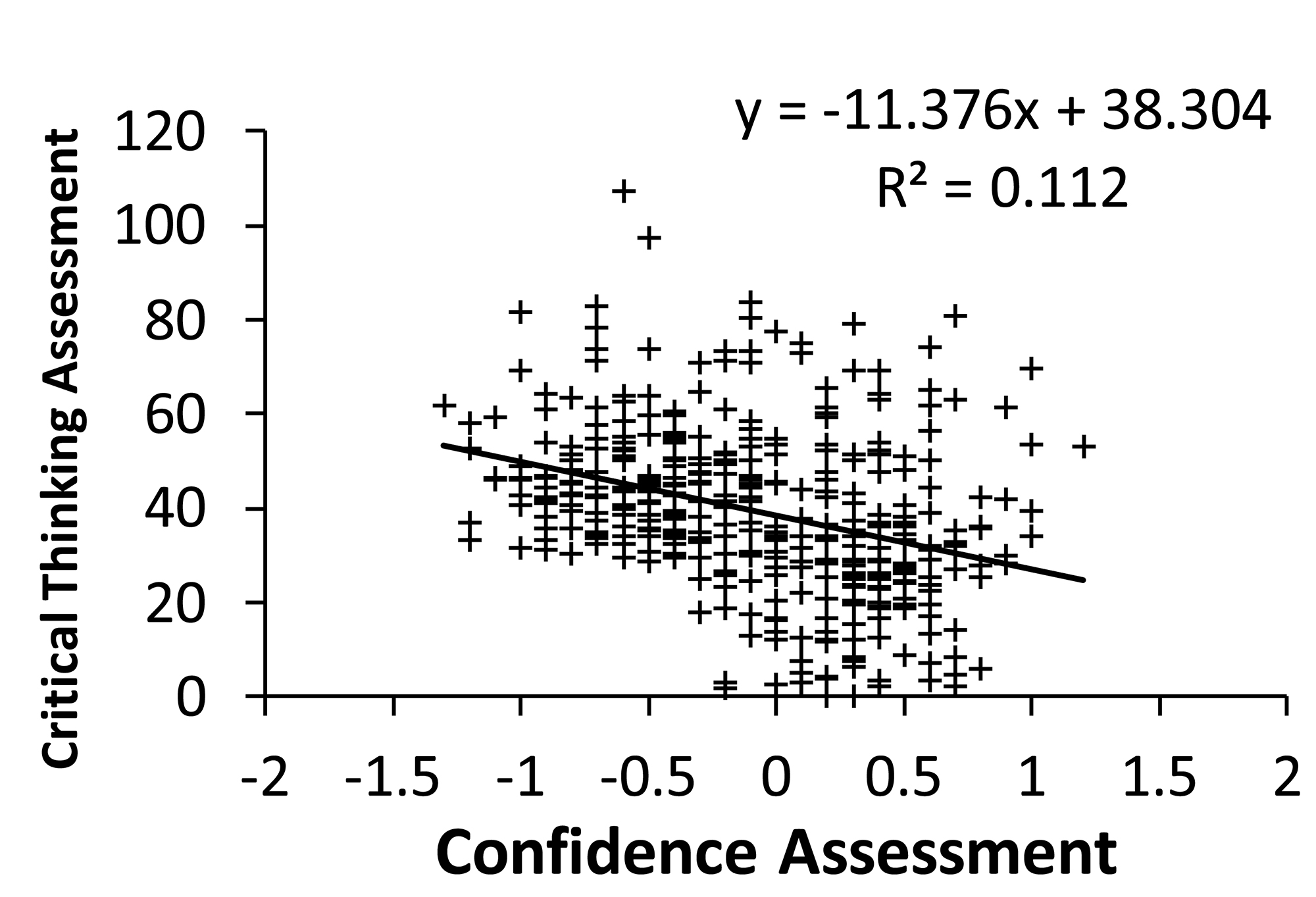

Experiment 2 included assessments of confidence to determine whether there was a double dissociation between each condition of the independent variable (reliable- and unreliable-source conditions) and the two dependent variables (confidence and performance) (detailed results are presented in Appendix B). Participants in both conditions performed poorly, but each group performed better than chance. There were also no speed-accuracy trade-offs that could account for differences between groups. Note that reaction-time data were not collected explicitly. Rather, they were inferred from the total number of questions completed in one hour. Therefore, no conclusions about changes in reaction-time data over time can be made. Data indicated that participants in the reliable-source condition did not use the provided iconography to guide them to the correct answer. Surprisingly, participants in the unreliable-source group outperformed participants in the reliable-source condition by a slight margin. Participants in the unreliable-source condition may have initially referred to the icons but, having discovered their lack of reliability, focused more intently upon the content of the questions.

Confidence ratings for the reliable-source condition were significantly higher than for the unreliable-source condition. Conversely, performance for the unreliable-source condition was better than the reliable-source condition. Consequently, there was a double dissociation between the levels of the independent variable and the two dependent variables. Specifically, despite having lower confidence, participants in the unreliable-source condition performed better on the critical thinking assessment than those in the reliable-source condition.

Participants who were faced with a difficult task appeared to rely upon the more deliberate cognitive system (System 2) even when the more intuitive system (System 1) might have provided a more accurate answer. Even though the icons in the reliable-source condition were more trustworthy than those in the unreliable-source condition, the icons were never 100% reliable. Participants may have observed instances where the icons failed to predict the correct answer and subsequently discounted the validity of the icons. These results may explain why students often ignore the source of information when challenged by difficult content in the primary literature. If students are unclear about the reliability of the source, they may ignore the source and focus on the content. Participants in the unreliable-source condition may have outperformed participants in the reliable-source condition because it may have taken them less time to recognize that the icons were not 100% reliable. Participants in the reliable-source condition may have used the icons until they proved less than 100% reliable, and then shifted their attention to the content.

The double dissociation between the source reliability and the dependent variables (confidence and performance) provides evidence in support of dual-process theory. Traditionally, dual-process experiments manipulate task quality or difficulty as an independent variable. Task difficulty was constant in this experiment. However, the two conditions had differential effects on confidence and performance. It is speculated that when source cuing is reliable, participants are more confident and rely upon cognitive System 1, which provides fewer correct conclusions. Conversely, when source cuing is unreliable, participants lack confidence and rely primarily upon cognitive System 2, which provides more correct answers.

Experiment 3

Experiments 1 and 2 manipulated the reliability of source materials while the intelligibility of the content was held constant. In game-based learning, students may be challenged with content that is easy or difficult to understand. There may be interaction effects between comprehension of the content and identification of reliable sources. Consequently, the intelligibility of the content was manipulated in Experiment 3. Intelligibility can be manipulated using a variety of techniques, including perceptual, cognitive, and linguistic methods (Begg, Anas and Farinacci 1992; McGlone and Tofighbakhsh 2000; Reber and Schwarz 1999). In this experiment, perceptual intelligibility was manipulated by degrading the text. Dual-process theories of fluency predicted that degrading the intelligibility of the text would engage cognitive System 2 and improve performance relative to conditions where text was not degraded.

Methods

Participants

Participants were recruited and randomly assigned to one of four possible groups (N = 400): (1) reliable-source/intelligible-content; (2) reliable-source/unintelligible-content; (3) unreliable-source/intelligible-content; and (4) unreliable-source/unintelligible-content. Participants in the reliable-source conditions were presented with icons that predicted correct answers with the same probability as those in Experiments 1 and 2. Participants in the unreliable-source conditions were presented with icons that were pseudorandomly associated with correct answers; the correct answer was paired with each icon 25% of the time. Participants in the intelligible-content conditions were presented with unmasked, high-contrast text, and participants in the unintelligible-content conditions were presented with masked, low-contrast text. Participants did not participate in the confidence assessment because comparing source reliability, content intelligibility, and confidence scores in a three-way ANOVA would be difficult, if not impossible, to interpret.
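
The 2 × 2 assignment described above can be sketched in a few lines of Python. This is an illustrative sketch only. It assumes equal cell sizes (100 participants per group), which the text does not state, and the function name and participant identifiers are hypothetical.

```python
import random
from itertools import product

SOURCE = ("reliable-source", "unreliable-source")
CONTENT = ("intelligible-content", "unintelligible-content")


def assign_conditions(participant_ids, seed=0):
    """Shuffle participants and deal them evenly into the 2 x 2 cells.

    Assumption: balanced cells; the original allocation may have differed.
    """
    cells = [f"{s}/{c}" for s, c in product(SOURCE, CONTENT)]
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)
    return {pid: cells[k % len(cells)] for k, pid in enumerate(ids)}


if __name__ == "__main__":
    assignment = assign_conditions(range(400))
    for cell in sorted(set(assignment.values())):
        print(cell, sum(v == cell for v in assignment.values()))
```

Shuffling first and then dealing participants into cells in rotation keeps the groups balanced while leaving each individual's condition unpredictable.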

Apparatus and procedures

All materials and procedures were identical to the previous experiments with the exception that some conditions contained degraded text. For conditions where the contrast of the text was degraded, two of the four possible answers were presented at random on a low-luminance background (Figure 2).

This background effectively reduced the contrast of the text to near-threshold levels. The contrast range of laptop computers varies greatly with viewing angle and distance from the center of the screen. Participants viewed the screen from a comfortable angle, and they were allowed to adjust the screen to ensure they could read the low-contrast text. The maximum luminance from the center to the edge of the screen varied from approximately 260 to 203 cd/m². Weber contrast ratios, $(L_{\max} - L_{\min})/L_{\text{background}}$, for high- and low-contrast text were 90% and 10%, respectively [High-contrast text: background = 200 cd/m²; text = 20 cd/m². Low-contrast text: background = 100 cd/m²; text = 90 cd/m²]. The background field was also altered to look like crumpled paper using a filter in PowerPoint, which acted as a high-frequency mask. The text was always above the threshold for detectability. However, participants reported that the text was more difficult to read than unaltered text. Note that the contrast of the text in Figure 2 is greater than that of the actual stimulus.
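
The reported contrast values can be checked directly from the luminance measurements. The short Python sketch below uses a hypothetical helper function and takes the magnitude of the luminance difference, an assumption made so that dark text on a lighter background yields the positive percentages reported above.

```python
def weber_contrast(l_background, l_text):
    """Magnitude of Weber contrast: |L_background - L_text| / L_background.

    Luminances are in cd/m^2. Using the absolute difference is an
    assumption that matches the positive percentages in the text.
    """
    if l_background <= 0:
        raise ValueError("background luminance must be positive")
    return abs(l_background - l_text) / l_background


if __name__ == "__main__":
    # Luminance values reported for the two text conditions.
    print(f"high-contrast: {weber_contrast(200, 20):.0%}")
    print(f"low-contrast:  {weber_contrast(100, 90):.0%}")
```

With the reported luminances, (200 − 20)/200 gives 90% and (100 − 90)/100 gives 10%, in agreement with the values stated for the high- and low-contrast text.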

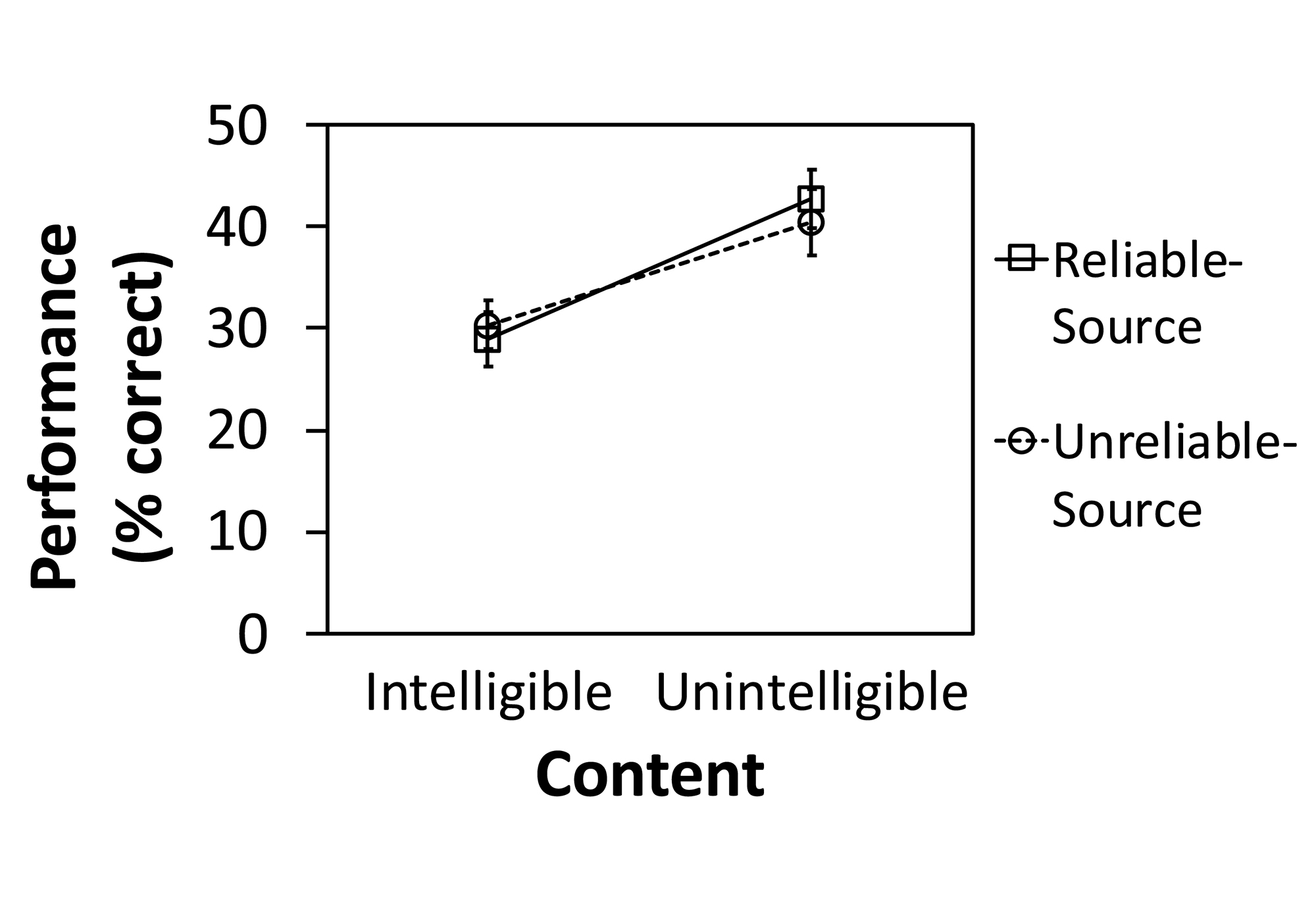

Discussion

The results of Experiment 3 indicate that content intelligibility affects the performance of students participating in critical thinking assessments (detailed results are presented in Appendix B). Performance for unintelligible content was better than for intelligible content. The degraded text in the unintelligible-content condition may have demanded closer inspection, resulting in better performance. Similar to Experiments 1 and 2, participants failed to capitalize on the information provided by the icons in the reliable-source conditions. Even though there was a significant effect for the interaction of source reliability and content intelligibility, the effect size was modest and post-hoc tests did not find a difference between source conditions across both levels of the content factor. Strictly interpreted, the interaction effect suggests that performance is further improved under unintelligible-content conditions when the source is reliable. Degrading the stimulus most likely engages cognitive System 2, which improves overall performance. Additionally, degrading the stimulus may also slow participants to the point where they consider the meaning of the icons and use these clues to come to a correct decision. Nevertheless, it is difficult to claim additional benefits for the reliable-source condition considering (1) the modest effect size for the interaction and (2) the fact that content intelligibility did not significantly vary across the levels of the source reliability factor. The effect of content unintelligibility seems to engage System 2 to the point where source reliability does not make a significant difference, thus echoing the results and interpretations of Experiments 1 and 2. When challenged with difficult texts, participants appeared to ignore the reliability of the source type, depending mostly upon the content to make decisions.

The more difficult the content was to read, the more likely students were to pay close attention and answer correctly. Performance was further enhanced when the already difficult task was made more difficult by degrading the text. There may be a continuum within System 2, whereby performance may improve the more scrutiny a target gets. More research must be conducted to determine if System 2 participates in a gradient-like fashion, or if it is engaged using a winner-take-all mechanism that suppresses the output of System 1. These results may have serious implications for educators and designers of game-based learning systems, who may label sources of information to guide instruction, practice, or assessment.

General Discussion

This study manipulated content intelligibility and source reliability in a simple critical thinking game and found differential effects for confidence and performance. This double dissociation provides evidence for the dual-process model of cognition originally proposed by Tversky and Kahneman (1973). The results also contradict naïve theories of fluency that predict confidence and performance are correlated.

Processing fluency is a metacognitive factor that has a pronounced effect on undergraduates’ critical thinking and their decision to consider sources of information. Participants were informed that certain icons would provide the correct answer 50% of the time, yet performance indicated that participants were not using this information to guide decisions. Even when content intelligibility was not manipulated, the difficulty of the critical thinking game was sufficient to divert attention away from the icons and toward the content itself. These results support a dual-process model of cognition (e.g., Petty and Cacioppo 1986; Kruglanski and Van Lange 2012; Kahneman 2003), whereby decision making is influenced by metacognitive factors (Alter and Oppenheimer 2009).

A double dissociation between confidence and performance suggests that two cognitive systems, a faster intuitive system and a slower deliberative system, can be distinguished in a game-based learning environment. Overall, confidence and performance were inversely correlated. Compared to fluent conditions, when the content was unintelligible or the source was unreliable, confidence was relatively lower and performance was relatively better. Other studies have provided evidence of a double dissociation between fluency and performance (Hirshman, Trembath and Mulligan 1994; Mulligan and Lozito 2004; Nairne 1988; Pansky and Goldsmith 2014). However, the current study is most similar to reports of a double dissociation between confidence and decision making in audition, where participants made more correct judgments for degraded stimuli even though they had less confidence than participants who rated intact stimuli (Besken and Mulligan 2014). Together, these studies provide evidence of two independent processes: one that supports intuitive decision making and a second that supports deliberative decision making.

In lieu of an observational study of game play, this laboratory experiment was conducted to better control internal validity. However, the limited range of difficulty in the critical thinking game may have affected the interpretation of the results. The difficulty of the questions could have served as an independent variable, ranging from easy to difficult. If this were the case, the easy and difficult questions should have elicited fluent and disfluent processing in the observer, respectively. However, because the focus of the study was on metacognitive factors in the presence of difficult texts, only difficult questions were used. Nevertheless, even when the content was difficult, as is often the case when undergraduates read primary literature, further manipulations of content intelligibility affected performance. Degrading the text to create a perceptually disfluent condition increased performance regardless of the reliability of the source of the material. This result suggests that participants who had already discounted the source of information were paying even closer attention to the content. Alternatively, participants who were using the icons to solve problems may have switched their attention to the content because of the manipulation to the text. These results are similar to studies where transposing letters or removing letters resulted in improved word recall (Begg et al. 1991; Kinoshita 1989; Mulligan 2002; Nairne and Widner 1988). Unintelligible text creates a disfluent condition that may function as a signal to motivate the perceiver to further scrutinize the target, which may lead to improved performance (Alter et al. 2007).

It remains to be seen whether this controlled laboratory study will generalize with respect to more complicated game-based learning experiences in naturalistic settings. The content and stimulus presentation for this study were initially developed as a prototype for a digital game to help students practice critical thinking skills. This study was motivated largely by the results from pilot studies that were difficult to interpret. While the information presented in the critical thinking game was as difficult as the primary source materials undergraduates encounter in many disciplines, the presentation of the sources could have been more realistic. Students are likely to encounter information from various sources via a web browser. To improve external validity, future studies could present discipline-specific content in a web-based game that mimics various sources of information students are likely to encounter (e.g., Wikipedia, peer-reviewed journals, the popular scientific press, and web blogs). The more realistic condition could then be compared to an even more elaborate game-based learning system, where students are challenged to solve scientific mysteries using various sources of information.

Additionally, the study may be limited because the participants were not sufficiently described. The study hypothesis was not directed at the effect of student demographics on critical thinking. Consequently, detailed demographics were not collected to evaluate the influence of gender identity, ethnicity, socioeconomic status, first-generation status, GPA, course load, and other factors. Participants in the research pool were varied and were randomly assigned. The conclusions are therefore likely to generalize to similar populations. Any questions about differences along one of these axes should be addressed in a subsequent study in which the demographic of interest serves as an independent variable.

Another challenge comes from attempting to generalize our results to the complex situations presented in game-based learning environments. Unlike controlled laboratory experiments, many games challenge students with multiple sources of information along various timelines. The unpredictability of games is partly what makes them so engaging. However, the interaction between cognitive and metacognitive factors in critical thinking would be difficult to study with more than a few independent variables. Fortunately, the most important effects were replicated across these three experiments, so future experiments will attempt additional replication using more game mechanics in a classroom setting. If these efforts are successful, the intent is to move the experiment to a fully online format, where students can practice domain-specific critical thinking skills on demand.

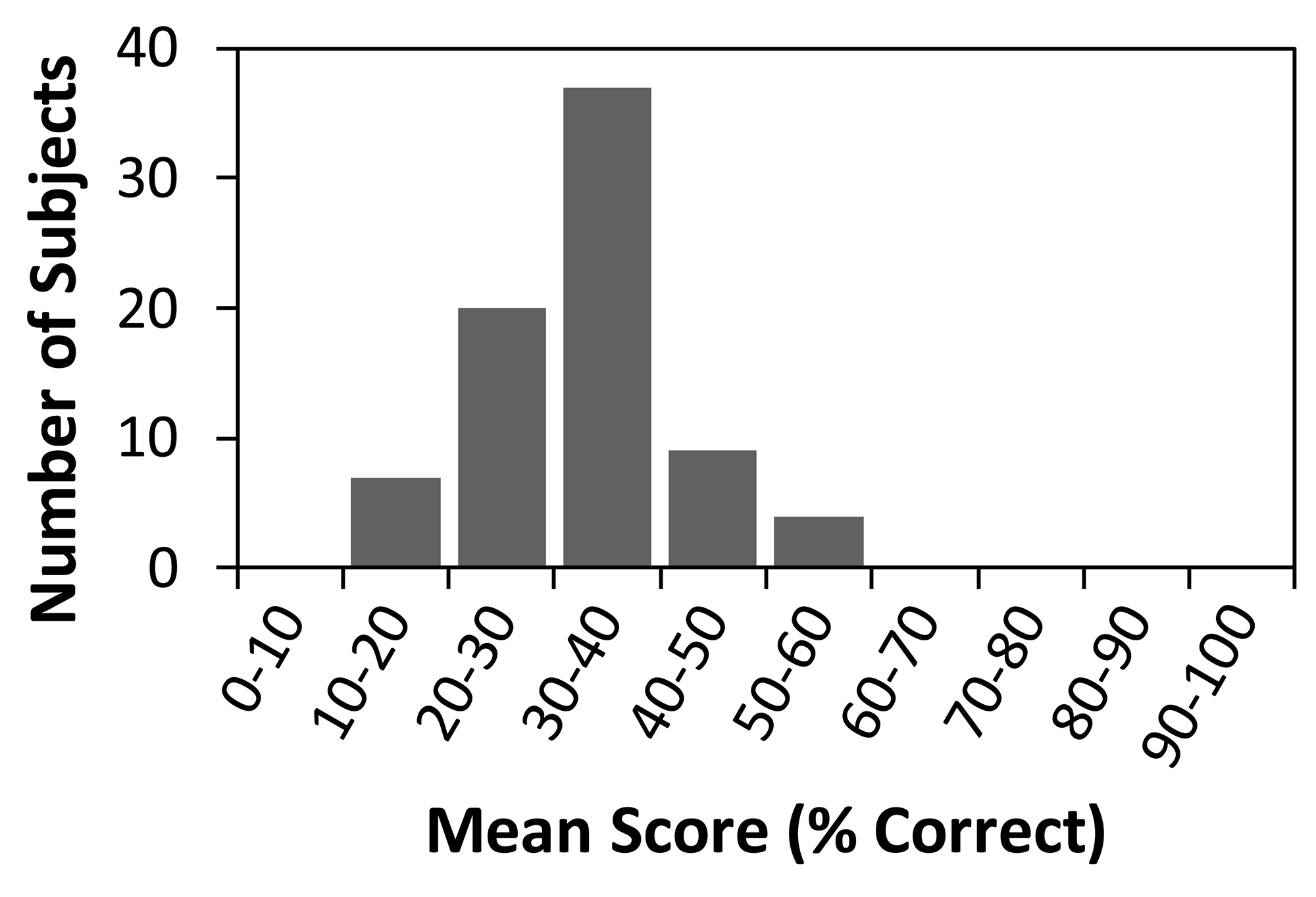

Task difficulty may be a limitation of the study. Undergraduate participants were asked to read texts and solve problems derived from the LSAT, material far beyond their experience and training. Performance was below 50% correct for most participants. Even so, the results indicate that students performed significantly above chance, and the effect size was quite large despite the difficulty of the reading and the challenges to critical thinking. Ultimately, however, generalization of the conclusions may be limited to tasks of equivalent difficulty, and the conclusions may not generalize to easier tasks.
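
Accuracy below 50% and above-chance performance are compatible, which a small calculation can illustrate. The sketch below is not the analysis used in this study, and its numbers are hypothetical. It assumes a single participant who answers 16 of 40 questions correctly, and it assumes five answer options per question (so chance is 20%), as is typical of LSAT-style items. It then computes a one-sided exact binomial tail probability.

```python
from math import comb


def binomial_tail(successes: int, trials: int, p_chance: float) -> float:
    """Return P(X >= successes) for X ~ Binomial(trials, p_chance)."""
    return sum(
        comb(trials, k) * p_chance**k * (1 - p_chance) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Hypothetical values for illustration only; none are taken from the study.
trials = 40        # assumed number of questions
successes = 16     # assumed number answered correctly (40% accuracy)
p_chance = 0.2     # assumed chance level for five-option questions

accuracy = successes / trials
p_value = binomial_tail(successes, trials, p_chance)

print(f"accuracy = {accuracy:.2f}")
print(f"one-sided p-value against chance = {p_value:.4f}")
```

Under these assumptions, the tail probability falls well below the conventional 0.05 threshold even though accuracy is only 40%, because the relevant comparison is with the 20% chance level rather than with 50%.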

This study has major implications for game-based learning. Digital learning environments present information through various channels and multiple sensory modalities (e.g., vision and hearing). Monitoring each channel can be as important as monitoring the information itself. For example, navigation cues in an open-world adventure game might be presented through audio, which captures attention immediately because it is salient relative to the visual information presented via a potentially crowded heads-up display. If the information presented in a channel is complicated or competes with other information, the learner might ignore the source of information altogether. Imagine a scene where helpful advice originates from an angelic character whispering in one of the player’s ears, and bad advice is touted by a devilish character in the other ear. If the messages get too complicated, players might lose track of which channel a message is coming from and mistakenly follow the bad advice of the devilish character. This study also addresses the foundational question of how to make better games for learning. Game design and psychology have accepted constructs and vocabulary that do not necessarily align with each other. The two fields must work to understand how game design concepts such as “meaningful choice” align with psychological constructs including critical thinking and decision making. Aligning the vocabulary and constructs from each camp will improve not only how we measure the impact of games but, more importantly, how we construct better games for learning.

This research also has broader implications for higher education in general. The results provide a possible explanation for successful approaches that foster critical thinking by teaching a stepwise approach to problem solving. For example, “Mission US” (Mission US 2019) is modeled after a successful curriculum, Reading Like a Historian, that explicitly teaches disciplinary literacy to high school students (Reisman 2012). To evaluate texts, students engage in four discrete disciplinary literacy strategies that encourage critical thinking: (1) contextualization, placing a document in temporal and geographical context; (2) sourcing, identifying the source and purpose of a document; (3) close reading, carefully considering the author’s use of language and word choice; and (4) corroboration, comparing multiple sources against each other. Similarly, academics have used variations of disciplinary literacy to guide students through primary literature (Hoskins 2008, 2010; Hoskins et al. 2011; Stevens and Hoskins 2014). In the C.R.E.A.T.E. method (Consider, Read, Elucidate the hypotheses, Analyze and interpret the data, and Think of the next Experiment), biology students analyze a series of papers from a particular lab, appreciate the evolution of the research program, and use other pedagogical tools to understand the material (e.g., concept mapping, sketching, visualization, transformation of data, creative experimental design). Both of these approaches succeed by disrupting the more intuitive cognitive System 1, which might lead to erroneous thinking, and engaging the more deliberative cognitive System 2, which supports evidence-based decision making and critical thinking. The current experiment provides evidence to support pedagogical methods such as disciplinary literacy and C.R.E.A.T.E., and our results suggest a cognitive mechanism that explains both the positive and negative outcomes that occur when students read difficult texts.

These pedagogical approaches are particularly significant in today’s media landscape, where students are exposed to complicated ideas from legitimate and dubious sources alike. For example, a student might encounter a challenging article from a questionable source on social media that argues against vaccination. Without proper training in disciplinary literacy, the student might fail to consider the source of information and come to the inaccurate conclusion that all vaccines are harmful. Consequently, by training students in disciplinary literacy, educators may inoculate students against the cognitive biases that might lead to erroneous decision making.