Introduction

Researchers and enthusiastic practitioners have long been arguing for the effectiveness of digital games as a means for teaching subject-specific skills while also motivating and engaging students (Gee 2008; Annetta 2008; Squire and Jenkins 2003). As games require players to solve complex problems, work collaboratively, and communicate with others in both online environments and the physical spaces where gameplay takes place, they are said to support students’ development of twenty-first century competencies (Spires 2015).

Recognizing the teacher’s role in designing and facilitating learning environments that support digital game-based learning (DGBL), including adapting content to suit the needs of diverse learners, is a critical component of effective DGBL. As McCall makes clear, “by itself…a…game is not a sufficient learning tool. Rather, successful game-based lessons are the product of well-designed environments” (2011, 61). Chee, in his book on using digital games in education, argues:

It is vital to understand that games do not “work” or “not work” in classrooms in and of themselves. They possess no causal agency. The efficacy of games for learning depends largely upon teachers’ capacity to leverage games effectively as learning tools and on students’ willingness to engage in gameplay and other pedagogical activities—such as dialogic interactions for meaning making—so that game use in the curriculum can be rendered effective for learning. (2016, 4)

On this view, the focus shifts from the games, game systems, and game content to “what teachers need to know” pedagogically (Mishra and Koehler 2006, 1018), including how to create space for digital games in the curriculum, organize classroom activities around the use of games, support students during both gameplay and their engagement with DGBL activities (Sandford et al. 2006; Allsop and Jessel 2015), and, as we have argued elsewhere, assess student learning (Hébert, Jenson, and Fong 2018). As Groff, Howells, and Cranmer make clear, “game-based learning approaches need to be well planned and classrooms carefully organized to engage all students in learning and produce appropriate outcomes” (2010, 7).

In this paper we discuss our attempt to articulate a series of digital game-based practices carried out by teachers as they used a digital game in their classrooms. Specifically, we detail nine strategies, which we call digital game-based pedagogies, that were common across the classrooms we observed and were used with varying degrees of success. As most of the teachers in the study attended a professional development (PD) session, we also draw connections between the content of the PD and these pedagogical strategies. We begin with a literature review of pedagogy and professional development in relation to DGBL, then discuss the structure of the study, and finally detail the digital game-based pedagogies identified from that qualitative work.

Related Literature: Digital Games, Pedagogy and Professional Development

When learning is reduced to knowledge transmission and a game offered as a medium for merely learning content, the role of the teacher is similarly narrowed to an intermediary, offering the game to students and stepping back in order to let learning through gameplay take place. On this view, the game and its design are a central focus, including “integrating learning objectives with[in] th[is] delivery medium” (Becker 2017, 156). Many studies of game-based learning focus on how a game is designed, with researchers either attempting to streamline best practices for designing games (Aslan and Balci 2015; Arnab et al. 2015; Alaswad and Nadolny 2015; Van Eck and Hung 2010) or discussing the design process of a specific game for use in the classroom (Tsai, Yu, and Hsiao 2012; Barab et al. 2005; Sánchez, Sáenz, and Garrido-Miranda 2010; Lester et al. 2014). We argue that simply focusing on how a game is designed is problematic as it places responsibility for student learning in the hands of designers who “may never have had direct or lived experiences of classroom teaching, [and who] are advocating on behalf of the learning and literacy offered by games without having to take into account the real and varied challenges faced by today’s diverse learners” (Nolan and McBride 2013, 597–98). It also has the potential effect of further exacerbating the divide between games and classrooms, positioning the game as a silo that operates outside of curricular decisions and pedagogical practices.

Absent from these discussions is the pivotal role of the teacher in the classroom. In fact, terms in the literature that might signal a discussion of teaching, such as instructional approaches, instructional methods, pedagogy, pedagogical approach, digital pedagogy, game-based learning techniques, and curriculum development (Charsky and Barbour 2010; Egenfeldt-Nielsen, Smith, and Tosca 2016; Becker 2009; Clark 2007; Rodriguez-Hoyos and Gomes 2012; Shabalina et al. 2016) are typically used in DGBL research to refer to the design of the game and accompanying materials that support learning (e.g. quizzes, assessment guides, and other paper and pencil tasks) instead of the actions of a classroom teacher. The assumption here seems to be that games can support student learning regardless of the role of the teacher, and, importantly, without considerations of the larger classroom environment and curricular structures put into place for digital game-based learning. Some researchers have challenged this assumption. Baek, for instance, has noted that games must be “mapped into curricula for their maximum effective utilization” (2008, 667). Similarly, Raabe, Santos, Paludo, and Benitti have argued that for DGBL, “the planning of the class is the most important stage and must involve the participation [of] teachers in choosing the content that should be supported by the use of the game…according to the goals [of] learning to be achieved” (2012, 688).

Teaching and pedagogy as they relate to DGBL have been taken up in some of the literature. Nousiainen, Kangas, Rikala, and Vesisenaho discuss teacher-identified competencies around pedagogy essential to game-based learning, including “curriculum-based planning,” that is, understanding how games can be used within the curriculum, how students can be involved in the curricular design process, and how to “plan game-based activities for supporting students’ academic learning and broader key competencies” (2018, 90). Moving away from pedagogical strategies specifically, Marklund and Taylor outline the roles teachers shift between during DGBL, including: the “gaming tutor,” as teachers aid students with more technologically focused elements of gameplay (e.g. manipulating controls); the “authority and enforcer of educational modes of play,” as teachers monitor student progress toward learning goals and direct play when necessary; and the “subject matter anchor,” as they draw out connections between the game and course content, including calling students’ attention to certain aspects of the game or breaking down complex concepts as they pertain to the game (2015, 363–365). Similarly, Hanghoj offers a series of “next-best practices” for teachers’ use in supporting DGBL, suggesting that teachers might “set the stage” by “providing relevant game information” for students, “recognize and challenge the students’ game experience by articulating different interpretations of a game session,” and “support students in their attempts to construct, deconstruct and reconstruct relevant forms of knowledge—both in relation to the game context, curricular goals and real live phenomena” (2008, 235).

One means of helping teachers consider their role in using games to support learning in the classroom is through professional development that might focus on teaching strategies, alongside creating a classroom ecology for DGBL. And yet, much like the research on DGBL more broadly, professional development for using games in classrooms rarely addresses pedagogy. For example, Ketelhut and Schifter’s research on developing PD for DGBL outlines the types of platforms (e.g. face-to-face, online, blended) used and how they compared to one another rather than discussing the content of the PD and its connection to pedagogy (Ketelhut and Schifter 2011). And while Chee, Mehrotra, and Ong’s PD centered on a particular teaching method, dialogic pedagogy, the authors examined teacher dilemmas rather than explicit pedagogical strategies reviewed in the PD sessions. At the same time, their findings call attention to the importance of pedagogy as teachers work to shift their teaching for DGBL. They state that teachers were “mostly accustomed to subject matter exposition followed by assigning student[s] worksheets to complete,” but with the digital games, had “to work in real time with the ideas that students were contributing, based on their gameplay experiences” (2014, 429). Consequently, this required shift in pedagogy “unsettled them” (2014, 429).

There are, of course, exceptions. The Software and Information Industry Association’s report on best practices for game use in the K–12 classroom recognizes the significance of pedagogy for DGBL, arguing that teachers should receive at least a half day of PD in order to become familiar with the theoretical underpinnings of DGBL, learn about the specific game they will be using in class, obtain practical information about creating game accounts and manipulating the game mechanics, and gain a better understanding of the “roles and responsibilities of teachers and students” (2009, 25) during gameplay. And Simpson and Stansberry provide an overview of working with teachers on the “G.A.M.E.” lesson planning model, which involves various stages: taking the perspective of the game designer to better understand how and to what extent games are engaging as well as asking students to contemplate their gameplay, “reflect[ing] on the decisions made and evaluat[ing] the consequences” (2009, 182).

As this review demonstrates, there is scant empirical research related to digital game-based pedagogies, and a critical need for more discussion of and research on this topic. In the next section, we discuss the study, which examined teachers’ pedagogical practices for DGBL in K–12 classroom spaces and the relationship between these practices and a professional development workshop.

The Study

Timeline of the Research

The project took place over an eight-month period, during the 2015–2016 school year, with data analysis completed at the beginning of the 2016–2017 school year. While the project was initially intended to run over a single school year, a work-to-rule ban on extracurricular activities put forth by the Elementary Teachers Federation in the province delayed the start date of the project by four months. The professional development session took place February 10–11, 2016, followed by observations from February 22–May 16. Interviews overlapped with observations: teachers whose classes were visited in February began interviews in early March, and interviews continued until the end of June. Data analysis took place concurrently with data collection, and was completed in October 2016.

The Game

Two educational games were used in this study: Sprite’s Quest: The Lost Feathers, and Sprite’s Quest: Seedling Saga, aligned with the grade seven and grade eight Ontario geography curriculum respectively. The games were designed by Le centre d’innovation pédagogique in collaboration with the Ontario Ministry of Education and selected as the focus for this study by our funding partner, the Council of Ontario Directors of Education. Both versions of Sprite’s Quest are 2D platformer games intended to aid in the development of physical and human geography concepts. The games also have accompanying student activity guides and teacher manuals available through an online platform. While the games can be downloaded by anyone through the Apple App Store or Google Play,[1] access to the web version of the games, along with the student activity guides and teacher manuals, is granted by individual boards of education through the Ministry of Education’s e-learning Ontario site. As this article focuses on the professional development element of the project, we do not provide a detailed overview of the games, the activity guides, or the teacher manuals here, but have done so elsewhere (Hébert, Jenson, and Fong 2018). None of the teachers had used Sprite’s Quest prior to this project.

Research Question

This study sought to identify pedagogical practices that supported DGBL. We asked: What teaching practices were common to teachers observed in the study?

Participants & Professional Development

Participants were recruited by the funding partner in conjunction with participating school boards. Altogether, 34 teachers (17 female, 17 male) from 10 school boards and 25 schools across Ontario, Canada took part in the study. Sixteen of these teachers taught straight grade 7 classes, seven grade 8, and one grade 9, while a number of teachers, especially those in smaller schools, taught split classes, with one grade 6/7/8 teacher participating, one grade 6/7, and eight grade 7/8. Twenty-eight teachers attended a professional development session that occurred at a university over a two-day period; teachers were released for that time from their classrooms. The two full days were organized and run by the authors (see Appendix B for a detailed schedule of the session). The professional development consisted of three main components:

Becoming Familiar with the Games: Walkthroughs and Content

First, as noted, none of the teachers had seen or played Sprite’s Quest before, so they were given time to become familiar with the two versions of the game during the PD session. Because the teachers did not have time to play either of the games in their entirety during the PD session, we produced “walkthroughs” that were reviewed during the PD session. Walkthroughs are textual and visual overviews of key elements of a game. They were made available to teachers throughout their play sessions, during lesson planning, and while teachers were using the games in their classrooms. Second, we drew attention to how the games provided geographic content. For example, we looked at how fact bubbles pop up during play, questions are presented at the beginning of each level, and background information is offered about the geographic location (e.g. the Himalayas) through which the sprite moves. Teachers were also instructed to encourage students to make note of the facts and the answers to the questions and to pay attention to the background of the games when using them to support student learning.

Exploring the Teacher Manual and the Activity Guide

Given that teacher manuals and activity guides for these games were available and in fact had been produced to support the implementation of the game in classrooms, we wanted to ensure that teachers had the time to examine them closely and to draw connections between these resources and their curriculum. To this end, we led teachers through a guided examination of the resources, reviewing the overall structure of the games as they aligned with the sections of the student activity guide and the teacher manual. We also summarized the information made available in the teacher manual and student activity guide and provided the summaries as a supplementary electronic handout.

Discussing Curricular Connections and Collaborative Lesson Planning

There were three key concepts in the games related to physical and human geography: place, liveability, and sustainability. The teacher manual and the PD session emphasized these, including drawing direct connections to the Ontario Ministry of Education grade 7 and 8 geography curriculum. Further, the PD supported collaborative lesson planning, which focused on creating learning goals, success criteria, and expectations for and evaluation of students. Finally, teachers were provided time to complete a unit plan, begin constructing individual lessons for the unit, and create assessments to use during the digital game-based unit, which they then shared with the whole group.

The remaining six teachers participated in the study, but did not attend the PD session. The teachers who did not participate in the PD session were selected at random. In lieu of PD, they were invited to attend a two-hour meeting at their board office. At the meeting, the teachers were introduced to the study and told how to access the teacher and student activity guides. They were also given time to play the games, but only while the researchers were speaking individually with teachers to organize some of the logistics around classroom visits.

Data Collection and Analysis

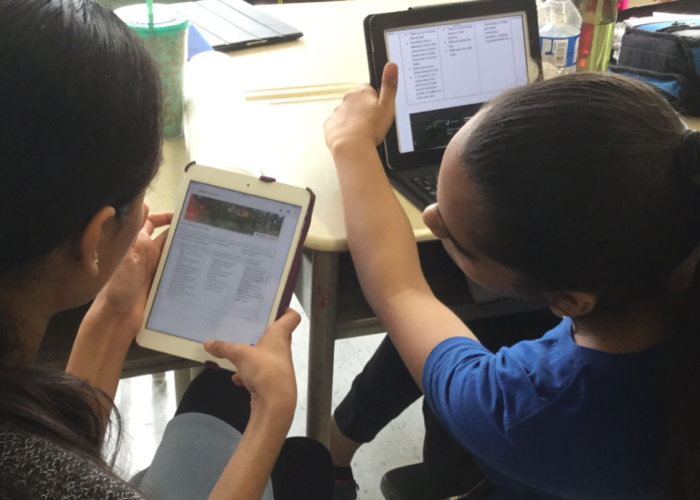

This qualitative study included observations of all teachers as they taught the DGBL unit, field notes based on observations, videos, and still photos taken during classroom visits, and interviews with teachers after the unit was completed.

Observations

Researchers visited each teacher’s classroom two to three times during the delivery of the unit, for 45 minutes to 1.5 hours per visit, documenting how teachers adopted the three central elements of the PD: how familiar teachers were with the game, how the teacher and student activity guides were used, and how lessons and assessments created in the PD were taken up. Researchers also made note of the classroom environment created to support DGBL and practices within it. This included, with respect to teachers’ practices in particular, lesson content and connections to the game, how class periods were structured and facilitated by the teacher, teacher focus on student learning including asking questions of students during play and guiding their play toward learning, connections between the game and the curriculum as well as cross-curricular connections outside of geography, and teacher knowledge and understanding of the game. For student activities in the classroom, our observations centered on time students spent on/off task, whether and in what ways students were engaged with the game, and conversations among students about the game and/or geography more broadly. Detailed field notes were taken along with videos, audio recordings, and still photos. Field notes were analyzed thematically using NVivo (Clarke and Braun 2017; Nowell, Norris, White, and Moules 2017).

Interviews

Teachers were interviewed at the completion of the study. They were asked to provide information about their curriculum, including lesson sequencing, assessments, time required to plan, and decisions they made about whether or not to use any of the game’s resources located in the activity guides; gameplay, including student experiences and learning, such as interactions with one another, individual students who excelled or struggled, whether students were making connections between the game world and the world outside of the game, and how the room was organized for gameplay; and the PD sessions, including whether they would participate in future PD, feedback on the sessions, and how the PD sessions impacted their use of the game and whether or not they would use it in the future. Interviews ranged in length from 25 to 80 minutes. Interviews were analyzed thematically using NVivo, identifying common themes that would aid the researchers in their understanding of teachers’ experiences (see Appendix A).

The next section extrapolates from the data and analysis described briefly here and offers a framework for digital game-based pedagogies, based on our nearly 100 hours of classroom observations and over 34 hours of interviews with teachers. The intent is to demonstrate, based on evidence gathered, a pedagogical framework that can be taken up and used by others who might expand on and modify it to best suit divergent contexts.

Digital Game-Based Pedagogies

Having analyzed the data, we were able to identify, with some clarity, approaches to teaching with games (digital game-based pedagogies) that were particularly supportive of DGBL. While the content of the PD and the skills teachers developed within it, including familiarity with the game, their use of activities and the teacher guide, and their adoption of the lessons planned during the PD, was an initial point of interest during observations, what became apparent was how teachers used this knowledge to shape their pedagogical practice. A teacher, sitting off to the side during gameplay, might have demonstrated knowledge of Sprite’s Quest by answering a student’s question when approached, but that teaching practice was less meaningful than that of a teacher who illustrated their knowledge by circulating around the classroom during gameplay, asking questions, directing students’ attention to elements of the game, and providing practical tips on how to navigate specific levels. That teachers used activities from the teacher guide was important, but among those who did, what type of learning activity was selected and how the lesson was structured around that activity told us more about impactful DGBL than simply whether or not an activity was used. And the quality of the lesson and assessment content and the pace of the unit created around the game impacted the nature of the DGBL experience. Consequently, through our observations, we began to identify a set of practices, that is, pedagogical strategies that best supported DGBL in the classroom.

What follows are details of these nine digital game-based pedagogies, grouped according to three general categories: gameplay, lesson planning and delivery, and framing technology and the game.

| Category | Pedagogical Strategies |
|---|---|
| Gameplay | Teacher knowledge of and engagement with the game during gameplay |
| Gameplay | Focused and purposeful gameplay |
| Gameplay | Collaborative gameplay |
| Lesson Planning and Delivery | Meaningful learning activities |
| Lesson Planning and Delivery | Cohesive curricular design: Structured lessons |
| Lesson Planning and Delivery | Appropriate lesson pacing and clear expectations |
| Framing Technology and the Game | Technological platforms not a point of focus |
| Framing Technology and the Game | Game positioned as a text to be read |
| Framing Technology and the Game | Connections to prior learning and to the world beyond the game environment |

Gameplay

1. Teacher knowledge of and engagement with the game during gameplay

Teachers demonstrated knowledge of the game in group discussions and one-on-one conversations with students. They regularly spoke of their own experiences during gameplay, including helping students overcome obstacles in the game. Teachers talked with students about how to focus play on the learning task at hand, including what to pay attention to during gameplay. Teachers were also engaged with gameplay. For example, they circulated to ask students questions, direct student attention to various facets of the game, and connect the game to the learning activity.

2. Focused and purposeful gameplay

During gameplay, teachers directed student focus to a specific learning activity. In this respect, gameplay was always purposeful, targeted at the completion of a particular learning activity. For instance, students might play a few levels of the game, directed by the teacher to pay attention to the climate, or to compare regions with respect to vegetation.

3. Collaborative gameplay

Teachers facilitated whole-class discussions that focused gameplay and connected game content to the curriculum more broadly. Teachers encouraged students to play together or to complete learning activities collaboratively. For example, one teacher asked students to work in groups to respond to the question of whether they would like to live in China (one of the locations featured in Sprite’s Quest) based on their experiences playing the game, and another asked groups to respond to discussion questions.

Lesson Planning and Delivery

4. Meaningful learning activities

Teachers assigned learning activities that involved the application of higher-order skills such as analysis or creation. For instance, one teacher asked students to produce a travel video for a specific geographic region in the game, another, to debate the merits of restricting mountain access in a particular region, and a third teacher, to construct an argumentative paragraph about whether hotels should be permitted to privatize beaches. If students were asked to jot down facts or information obtained through gameplay, it was in support of an additional, higher-order learning activity. In some instances, these materials were extracted from the student activity guide, while in others, they were created by teachers.

5. Cohesive curricular design: Structured lessons

Gameplay was integrated into the curriculum by the teacher. An introductory lesson that rooted play in learning preceded gameplay, and a learning activity followed play. For example, one teacher facilitated a lesson on garbage disposal practices around the world before asking students to play a level of the game that focused on garbage disposal in a specific region. The teacher also created a follow-up activity wherein students composed a letter about disposal to a government official in that region of the world.

6. Appropriate lesson pacing and clear expectations

Teachers provided students with concrete time frames for the completion of tasks. Often, periods were structured in such a way that multiple activities were to take place. For instance, a 5-minute introductory activity might be followed by 20 minutes of structured and targeted gameplay, with the final 15 minutes of the period allotted to small group discussions around a learning activity. Teachers regularly reminded students of the time remaining to complete tasks.

Framing Technology and the Game

7. Technological platforms not a point of focus

Some teachers chose to use the electronic activity guide or board-based platforms for the completion of learning activities. In these cases, the activities, rather than the technology, remained the point of focus. When technology malfunctioned (e.g. students had difficulty logging into the board site or material was not uploading to the activity guide), teachers continued to place emphasis on the significance of the learning taking place, asking students to share resources to complete the activity, or directing them to alternative materials such as pen, pencil, and paper. In so doing, teachers maintained the pace of the lesson.

8. Game positioned as a text to be read

Teachers framed the game as a text that students could reference in support of their learning in the classroom, extending DGBL beyond learning during play. To do so, they facilitated connections between the game and other material such as videos viewed in class, textbooks, and class discussions. For example, to respond to an activity question, one teacher guided students in using material learned both in the game and the textbook to support their answers.

9. Connections to prior learning and to the world beyond the game environment

Teachers connected gameplay to prior learning and to material examined outside of the game context. They reminded students of learning during previous play sessions and of subject-specific and cross-curricular learning during class discussions. For example, one teacher connected a level of the game that explored waste management to a recently completed assignment examining the Great Pacific Garbage Patch. Another teacher connected learning around a water-locked region in the game to a historical lesson about expedition. Teachers also drew parallels between game locations and the local community. For instance, a teacher engaged the class in a heated debate, comparing garbage collection and pollution in certain areas of the game to garbage collection in the school and pollution in the local city and surrounding area.

Possible Impact of Professional Development on Teachers’ Digital Game-Based Pedagogies

What we offer in the previous section are descriptions of exemplary pedagogical practices that support DGBL. Not all of the teachers who participated in the study engaged with these practices in the way described above. In fact, 26% of the teachers were what we would label as highly successful in engaging in the digital game-based pedagogies outlined in this article. Another 29% were somewhat successful, at times adopting some of these pedagogical strategies and not others, creating meaningful learning activities and highly structured lessons that were adequately paced, for example, but then failing to connect the game to prior learning and the world beyond the game environment, not requiring students to collaborate with one another, and not positioning the game as text to be read. And the final 45% were labeled as unsuccessful, adopting DGBL in the classroom in a more haphazard manner, offering pedagogical practices that did not reflect the digital game-based pedagogies detailed in this text. (See Figure 1)

Given the significance of the digital game-based pedagogies and the extent to which these practices were common across the classrooms we visited, we wanted to determine if there was any connection between these practices and whether or not teachers had received PD.

In the category of strong alignment, 29% of teachers who received PD employed pedagogical strategies that matched that criterion, compared to 17% of the teachers who did not receive PD. For moderate alignment, 32% of the teachers who received PD were grouped in this category compared to 17% of the teachers who did not receive PD. And finally, 39% of the teachers who received PD were weakly aligned with these practices compared to 66% of the teachers who did not receive PD. (See Figures 2 and 3)
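To make these percentages easier to interpret, they can be related back to approximate numbers of teachers. The following is a minimal sketch, assuming the group sizes given in the Participants section (28 teachers who attended the PD and 6 who did not) and assuming each reported percentage corresponds to the nearest whole number of teachers. The resulting counts are inferred for illustration and are not figures reported in the study; the names `GROUP_SIZES`, `REPORTED_PERCENT`, and `implied_counts` are hypothetical.

```python
# Group sizes are taken from the Participants section; percentages are those
# reported in the text. Derived counts are inferred, NOT reported data.
GROUP_SIZES = {"pd": 28, "no_pd": 6}
REPORTED_PERCENT = {
    "pd": {"strong": 29, "moderate": 32, "weak": 39},
    "no_pd": {"strong": 17, "moderate": 17, "weak": 66},
}


def implied_counts(percentages, group_size):
    """Return the whole-number count nearest to each reported percentage."""
    return {
        level: round(pct / 100 * group_size)
        for level, pct in percentages.items()
    }


# Inferred number of teachers per alignment level, per group.
counts = {
    group: implied_counts(REPORTED_PERCENT[group], GROUP_SIZES[group])
    for group in GROUP_SIZES
}

# Combine both groups to compare against the overall proportions.
combined = {
    level: counts["pd"][level] + counts["no_pd"][level]
    for level in ("strong", "moderate", "weak")
}

for group, by_level in counts.items():
    print(group, by_level)
print("combined", combined)
```

Under these assumptions, the inferred counts sum to 28 and 6 within each group and to 34 overall. With only six teachers in the group that did not receive PD, each teacher accounts for roughly 17 percentage points, which is one reason the comparison should be read descriptively.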

Conclusion

The limitations to this research are: 1) it was not possible to spend more than three to four hours in each classroom given the geographical scope of the project and the number of participants, although the study could have benefited from observation of the entire unit as it was delivered; and 2) we did not have a large enough number of participants to generate meaningful quantitative comparative data, which could be of interest in future research. There is very rich data, of course, that due to word limits we were not able to detail further. However, we hope to have highlighted the importance of pedagogy for creating environments conducive to DGBL, calling attention to best practices around structuring and conceptualizing gameplay, planning and delivering content, and framing both technology and a game. While these pedagogical strategies were not the focus of our professional development session, it is clear from our observations of participants’ teaching after the PD that the session impacted their teaching as it pertained to these best practices. More broadly and considering gaps in the practices of teachers involved in our study, these digital game-based pedagogies provide a framework for better understanding not only what good teaching with games looks like but also areas where teachers require additional support.

We have argued that very little research on digital game-based learning examines teacher pedagogies and that even fewer studies of professional development for teachers on DGBL either focus on pedagogy or study the impact of professional development on teacher practice. By observing thirty-four teachers in their classrooms after providing a professional development session, we identified a common set of digital game-based pedagogies that supported digital game-based learning. While our professional development session did not explicitly address these pedagogical strategies, either through discussion or modeling, we did recognize areas in which the content of our professional development session overlapped with some of the strategies teachers employed. This research can inform future PD studies that attempt to better understand the impact of modeling and discussing these digital game-based pedagogies within professional development sessions. This work, importantly, offers a potential framework for providing teachers with the practical skills required to support students in digital game-based learning in classroom spaces. It also signals a need for future studies that focus specifically on pedagogies that best support DGBL.