But in Spring 2020, as campuses around the globe went fully remote, online, and often asynchronous in response to the COVID-19 pandemic, many of us met the limits of these strategies. Tactics like situated collaboration, shared machinery, or lab space became impossible to employ in the face of this sudden temporal obsolescence, as our working, updated technologies became functionally “obsolete” within the time-boundedness of that moment. In my own case, the effects of this moment were most acutely felt in my Introduction to the Digital Humanities class, where the move to remote teaching coincided with our electronic literature unit, a unit where the combined effects of technological obsolescence and proprietary platforms already necessitate adopting strategies so that students may equitably engage the primary texts. For me, primary among these strategies are sharing machinery to experience texts together, guiding students through emulator or software installation as appropriate, and adopting collaborative critical making pedagogies to promote material engagement with texts. In Spring 2020, though, as my class moved to a remote, asynchronous model, each of these strategies was directly challenged.

I felt this challenge most keenly in trying to teach Brian Kim Stefans’s Dreamlife of Letters (2000) and Amaranth Borsuk and Brad Bouse’s Between Page and Screen (2012), two works that, though archived and freely available via the Electronic Literature Collection and therefore ideal for accessing from any device with an internet connection, were built in Flash and so require the Flash Player plug-in to run. Since Adobe’s announcement in 2017 that they would stop supporting Flash Player by the end of 2020, fewer and fewer students enter my class with machines prepared to run Flash-based works, either because they have not installed the plug-in, or because they are on mobile devices which have never been compatible with Flash; Anastasia Salter and John Murray (2014) argue that this incompatibility largely contributed to Adobe’s decision. The COVID-19 move to remote, online instruction effectively meant that students with compatible hardware had to navigate the plug-in installation on their own (a process requiring a critical level of digital literacy), while those without compatible hardware were unable to access these works except as images or recordings. In the Spring 2020 teaching environment, then, the temporal obsolescence brought on by COVID-19 acted as a harbinger for the eventual inaccessibility of e-literary work that requires Flash to run.

The problem space that I occupy here is this: teaching electronic literature in the undergraduate classroom, as its digital material substrates move ever closer to obsolescence, an impossible race against time that speeds up each year as students enter my classroom with machines increasingly less compatible with older software and platforms. Specifically, I focus on the challenge of teaching e-literature in the undergraduate, digital humanities classroom, while facing the loss of Flash and its attendant digital poetics—a focus informed by my own disciplinary expertise, but through which I offer pedagogical responses and strategies that are useful beyond this scope.

Central to my argument, here, is that teaching e-literary practice and poetics in the face of technological obsolescence is most effective through an approach that interweaves practice-based critical making of e-literary texts, with the more traditional humanities practices of reading, playing, or otherwise consuming texts. Critical making pedagogy, however, is not without its critiques, particularly as it may promote inequitable classroom environments. For this reason, I start with a discussion of critical making as feminist, digital humanities pedagogy. From there, I turn more explicitly to the immediate problem at hand: the impending loss of Flash. I address this problem, first, by highlighting some of the work already being done to maintain access to e-literary works built in Flash, and second by pointing to some of the limits of these projects, as they prioritize maintaining Flash-based works for readerly consumption, even though the loss of Flash is also the loss of an amateur-friendly, low-stakes coding environment for “writing” digital texts. Acknowledging this dual loss brings me to a short case study of teaching with Stepworks, a contemporary, web-based platform that is ideal for creating interactive, digital texts, with poetics similar to those created in Flash. As such, it is a platform through which we can effectively use critical making pedagogies to combat the technological obsolescence of Flash. I close by briefly expanding from this case study, to reflect on some of the ways critical making pedagogies may help combat the loss of e-literary praxes beyond those indicative of and popularized by Flash.

Critical Making Pedagogy As DH Feminist Praxis

Critical making is a mode of humanities work that merges theory with practice by engaging the ways physical, hands-on practices like making, doing, tinkering, experimenting, or creating constitute forms of thinking, learning, analysis, or critique. Strongly associated with the digital humanities given the field’s own relationship to intellectual practices like coding and fabrication, critical making methodologically argues that intellectual activity can take forms other than writing, as coding, tinkering, building, or fabricating represent more than just “rote” or “automatic” technical work (Endres 2017). As critical making asserts, such physical practices constitute complex forms of intellectual activity. In other words, it takes to task what Bill Endres calls “an accident of available technologies” that has proffered writing its status as “the gold standard for measuring scholarly production” (2017).

Critical making scholarship can, thus, take a number of forms, as Jentery Sayers’s (ed.) Making Things and Drawing Boundaries showcases (2017). For example, as a challenge to and reflection on the invasiveness of contemporary personal devices, Allison Burtch and Eric Rosenthal offer Mic Jammer, a device that transmits ultrasonic signals that, when pointed towards a smartphone, “de-sense that phone’s microphone,” to “give people the confidence to know that their smartphones are non-invasively muted” (Burtch and Rosenthal 2017). Meanwhile, Nina Belojevic’s Glitch Console, a hacked or “circuit-bent” Nintendo Entertainment System, “links the consumer culture of video game platforms to issues of labor, exploitation, and the environment” through a play-experience riddled with glitches (Belojevic 2017). Moving away from fabrication, Anne Balsamo, Dale MacDonald, and Jon Winet’s AIDS Quilt Touch (AQT) Virtual Quilt Browser is a kind of preservation-through-remediation project that provides users with opportunities to virtually interact with the AIDS Memorial Quilt (Balsamo, MacDonald, and Winet 2017). Finally, Kim A. Brillante Knight’s Fashioning Circuits disrupts our cultural assumptions about digital media and technology, particularly as they are informed by gendered hierarchy; this project uses “wearable media as a lens to consider the social and cultural valences of bodies and identities in relation to fashion, technology, labor practices, and craft and maker cultures” (Knight 2017).

Each of these examples highlights the ways critical making disrupts the primacy of writing in intellectual activities. However, they are also entangled in one of the most frequently lobbed and not insignificant critiques of critical making as a form of Digital Humanities (DH) praxis: that it reinforces the exclusionary position that DH is only for practitioners who code, make, or build. This connection is made explicit by Endres, as he frames his argument that building is a form of literacy through a mild defense of Stephen Ramsay’s 2011 MLA conference presentation, which argued that building was fundamental to the Digital Humanities. Ramsay’s presentation was widely critiqued for being exclusionary (Endres 2017), as it promoted a form of gatekeeping in the digital humanities that, in turn, reinforces traditional academic hierarchies of gender, race, class, and tenure-status. As feminist DH in particular has shown, arguments that “to do DH, you must (also) code or build,” imagine a scholar who, in the first place, has had the emotional, intellectual, financial, and temporal means to acquire skillsets that are not part of traditional humanities education, and who, in the second place, has the institutional protection against precarity to produce work in modes outside “the gold standard” of writing (Endres 2017).

Critical making as DH praxis, then, finds itself in a complicated bind: on the one hand, it effectively challenges academic hierarchies and scholarly traditions that equate writing with intellectual work; on the other hand, as it replaces writing with practices (akin to coding) like fabrication, building, or simply “making,”[1] it potentially reinforces the exclusionary logic that makes coding the price of entry into the digital humanities “big tent” (Svensson 2012).[2] Endres, however, raises an important point that this critique largely fails to account for: that “building has been [and continues to be] generally excluded from tenure and promotion guidelines in the humanities” (Endres 2017). That is, while we should perhaps not take DH completely off the hook for the field’s internalized exclusivity and the ways critical making praxis may be commandeered in service of this exclusivity, examining academic institutions more broadly, of which DH is only a part, reveals that writing, with its attendant systems of peer review and impact factors, remains the exclusionary technology, gate-keeping from within.

To offer a single point of “anec-data:”[3] in my own scholarly upbringing in the digital humanities, I have regularly been advised by more senior scholars in the field (particularly other white women and women of color) to only take on coding, programming, building, or other making projects that I can also support with a traditional written, peer-reviewed article—advice that responds explicitly to writing’s position of primacy as a metric of academic research output, and implicitly to academia’s general valuation of research above activities like teaching, mentorship, or service. It is also worth noting that this advice was regularly offered with the clear-eyed reminder that, as valuable as critical making work is, ultimately the farther the scholar or practitioner appears from the cisgendered, heterosexual, able-bodied, white, male default, the more likely it is that their critical making work will be challenged when it comes to issues of tenure, promotion, or job competitiveness. While a single point of anec-data hardly indicates a pattern, the wider system of academic value that I sketch here is well-known and well-documented. It would be a disservice, then, not to acknowledge the space that traditional, peer-reviewed, academic writing occupies within this system.

Following Endres, my own experience, and systemic patterns across academia, I would argue that even though critical making can promote exclusionary practices in the digital humanities, the work that this methodological intervention does to disrupt technological hierarchies and exclusionary systems—including and especially, writing—outweighs the work that it may do to reinforce other hierarchies and systems. Indeed, I would go one step further to add my voice to existing arguments, implicit in the projects cited above and explicit throughout Jacqueline Wernimont and Elizabeth Losh’s edited collection, Bodies of Information: Intersectional Feminism and the Digital Humanities (2018), that critical making is feminist praxis, not least for the ways it contributes to feminism’s long-standing project of disrupting, challenging, and even breaking language and writing.[4]

The efficacy of critical making’s feminist intervention becomes even more evident and, I would argue, powerful when it enters the classroom as undergraduate pedagogy. Following critical making scholarship, critical making pedagogy similarly disrupts the primacy of written text for intellectual work. Students are invited to demonstrate their learning through means other than a written paper, in a student learning assessment model that aligns with other progressive, student-centered practices like the un-essay.[5] Because research in digital and progressive pedagogy highlights the ways things like un-essays are beneficial to student learning, I will focus here on pointing to particular ways critical making pedagogy in the undergraduate digital humanities classroom operates as feminist praxis for disrupting heteropatriarchal assumptions about technology. For this, I will pull primarily from feminist principles of and about technology, as outlined by FemTechNet and in Catherine D’Ignazio and Lauren Klein’s Data Feminism, and from my experiences teaching undergraduate digital humanities classes through and with critical making pedagogies.

In their White Paper on Transforming Higher Education with Distributed Open Collaborative Courses, FemTechNet unequivocally states “effective pedagogy reflects feminist principles” (FemTechNet White Paper Committee 2013), and the first and perhaps most consistent place that critical making pedagogies respond to this charge is in the ways that critical making makes labor visible, a value of intersectional feminism broadly, and data feminism specifically (D’Ignazio and Klein 2020). Issues of invisible labor have long been central to feminist projects, so it is no surprise that this would also be a central concern in response to Silicon Valley’s techno-libertarian ethos that blackboxes digital technologies as “labor free” for both end-users and tech workers. When students participate in critical making projects that relate to digital technologies (projects that may range from building a website or programming an interactive game, to fabricating through 3D printing or physical circuitry), they are forced to confront the labor behind (and rendered literally invisible in) software and hardware. This confrontation typically manifests as frustration, often felt in and expressed through their bodies, necessitating an engagement with affect, emotion, care, and often, collaboration in this work, all feminist technologies directly cited both in FemTechNet’s manifesto (FemTechNet 2012) and as core principles of data feminism (D’Ignazio and Klein 2020). Similarly, as students learn methods and practices for their critical making projects, they inevitably find themselves facing messiness, chaos, even fragments of broken things, and only occasionally is this “ordered” or “cleaned” by the time they submit their final deliverable.
Besides recalling FemTechNet’s argument that “making a mess… [is a] feminist technolog[y]” (FemTechNet 2012), the physical and intellectual messiness of critical making pedagogy also requires a shift in values away from the “deliverable” output, and towards the process of making, building, or learning itself. At times, this shift in value toward process can manifest as a celebration of play, as the tinkering, experimentation, chaos, and messiness of critical making transform into a play-space. While I am hesitant to argue too forcefully for the playfulness of critical making in the classroom (not least for the ways play is inequitably distributed in academic and technological systems), Shira Chess has recently and compellingly argued for the need to recalibrate play as a feminist technology, so I name it here as an additional, potential effect of critical making pedagogy (Chess 2020). Whether it transforms to play or not, however, the shift in value away from a deliverable output is always a powerful disruption of the masculinist, capitalist narratives of “progress” and “value” that undergird technological, academic work.

These are, of course, also the narratives primarily responsible for the profitability of obsolescence, which brings us back to my primary thesis and central focus: the efficacy of adopting critical making pedagogies to counter the effects of technological obsolescence in electronic literature. Because technological obsolescence is a core concern of electronic literature, it will be worth spending some time and space to examine this relationship more deeply, and address some of the ways the field is already at work countering loss-through-obsolescence. Indeed, some of this work already anticipates possibilities for critical making to counter this loss.

Preservation and E-lit

As stated, technological obsolescence is the phenomenon whereby technologies become outdated (obsolete) as they are updated. Historically, technological obsolescence has occurred in concert with developments in computing technologies that have radically altered what computers can do (e.g., moves from primarily text to graphics processing power) or how they are used (e.g., with the advent of programming languages, or the development of the graphical user interface). However, as digital technologies have developed into the lucrative consumer market of today, this phenomenon has come to be driven more heavily by capitalistic gains through consumer behaviors. Consider, for instance, iOS updates that no longer work on older iPhone models, or new hardware models that do not fit old plugs, USB ports, or headphones. In each case, updates to the technology force consumers to purchase newer models, even if their old ones are otherwise functioning properly.

In the field of electronic literature, obsolescence requires attention from two perspectives: the readerly and the writerly. From the former, obsolescence threatens future access to e-literary texts, so the field must regularly engage with preservation strategies; from the latter, it requires that the field regularly engage with new technologies for “writing” e-literary texts, as new platforms and programs both result from and in others’ obsolescence. E-lit, thus, occupies both sides of the obsolescence coin: on the one side, holding the outdated to ensure preservation and access, and on the other, embracing the updated, as new platforms, programs, and hardware prompt the field’s innovation. Much of the field’s focus is on combating obsolescence through attention to outdated (or, as in the case of Flash, soon-to-be-outdated) platforms, and maintaining these works for future audiences. However, this attention only weakly (if at all) accounts for the flipside of the writerly, where obsolescence also threatens loss of craft or practice in e-literary creation; this is where, I argue, critical making as e-literary pedagogy is especially productive as a counter-force to loss. First, though, it will be worth looking more closely at the ways obsolescence and e-literature are integrated with one another.

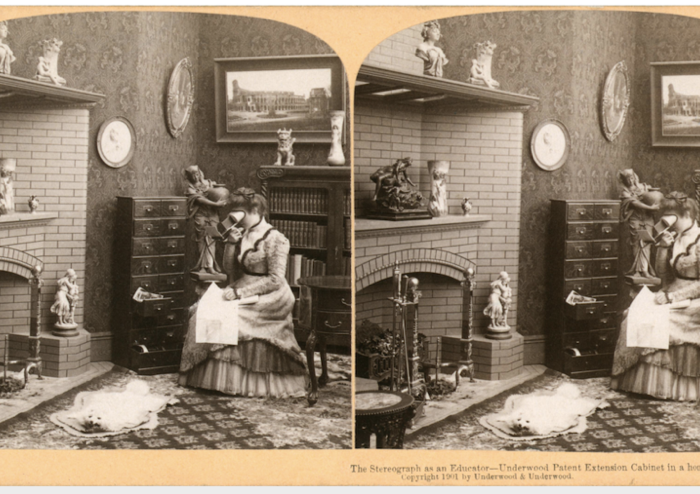

Maintaining access to obsolete e-literature is, and has been, a central concern of the field in large part because the field’s own history “is inextricably tied to the history of computing, networking, and their social adoption” (Flores 2019). A brief overview of e-literature’s “generations” effectively illustrates this relationship. The first generation of e-lit is primarily pre-web, text-heavy works developed between 1952 and 1995, dates that span the commercial availability of computing devices and the development of language-based coding, to the advent and adoption of the web (Flores 2019). Spanning over 40 years, this generation also includes the period 1980–1995, which is “dominated by stand-alone narrative hypertexts,” many of which “will no longer play on contemporary operating systems” (Hayles and House 2020, my italics). The second generation, beginning in 1995, spans the rise of personal, home computing and the web, and is characterized by web-based, interactive, and multimedia works, many of which are developed in Flash (Flores 2019). In 2005, the web of 1995 shifted into the platform-based, social “web 2.0” that we recognize and use today. Leonardo Flores uses this year to mark the advent of what he argues for as the third generation of e-lit, which “accounts for a massive scale of born digital work produced by and for contemporary audiences for whom digital media has become naturalized” (Flores 2019). In the Electronic Literature Organization (ELO)’s Teaching Electronic Literature initiative, N. Katherine Hayles and Ryan House characterize third generation e-lit, specifically, as “works written for cell phones” in contrast to “web works displayed on tablets and computer screens” (Hayles and House 2020).

Though Flores includes activities like storytelling through gifs and poetics of memes in his characterization of third generation e-lit, framing this moment through cell phones is helpful for thinking about the centrality of computing development and its attendant obsolescence to the field. In the first place, pointing to work developed for cell phones immediately brings to mind challenges of access due to Apple’s and Android’s competitive app markets. Here, there is, on the one hand, the challenge of apps developed for only one of these hardware-based platforms, and so inaccessible to users of the other; on the other hand, there are the continuous operating system updates that, in turn, require continuous app updates, which regularly result in apps seemingly, and sometimes literally, becoming obsolete overnight. In the second place, the 1995–2015 generation of “web works displayed on tablets and computer screens” is, as noted, a generation characterized by the rising ubiquity of Flash, a platform that was central to both user-generated webtexts and e-literary practice of this time (Salter and Murray 2014). Flash-based works are currently facing their own impending obsolescence due to Adobe’s removal of support, a decision that Salter and Murray argue results directly from the rise of cell phones and/as smartphones which do not support Flash. Thus, cell phones and “works created for cell phones” once again demonstrate the intricate relationship between computing history, technological development, and e-literature.

As this brief history demonstrates, the challenge of ensuring access to e-lit in the face of technological obsolescence is absolutely integral to the field, as it ultimately ensures that new generations of e-lit scholars can access primary texts. Indeed, it is a central concern behind one of the field’s most visible projects: the Electronic Literature Collection (ELC). As of this writing, the ELC is made up of three curated volumes of electronic literature, freely available on the web and regularly maintained by the ELO. In addition to expected content like work descriptions and author’s/artist’s statements, each work indexed in the ELC is accompanied by notes for access; these may include links, files for download, required software and hardware, or emulators as appropriate. The ELC, thus, operates as both an invaluable resource for preserving access to e-lit in general, and for ensuring access to e-lit texts for teaching undergraduates. Most of the work in the ELC is presented either in its original experiential, playable form, or as recorded images or videos when experiencing the work directly is untenable for some reason (as in, for instance, locative texts which require their user to be in specific locations to access the text). However, some of the work is presented with materials that encourage a critical making approach—a move in direct conversation with my argument that critical making plays an important pedagogical role for experiencing and even preserving e-literary practice, even and especially if the text itself cannot be experienced or practiced directly. Borsuk and Bouse’s Between Page and Screen, an augmented reality work that requires both a physical, artist’s book of 2D barcodes specifically designed for the project, and a Flash-based web app that enables the computer’s camera to “read” these barcodes, offers a particularly strong example of this.

Between Page and Screen is indexed in the 3rd volume of the ELC. In addition to the standard information about the work and its authors, the entry’s “Begin” button contains an option to “DIY Physical Book,” which will take the user to a page on the work’s website that offers users access to 1) a web-based tool called “Epistles” for writing their own text and linking it to a particular bar code; and 2) a guide for printing and binding their own small chapbook of bar codes, which can then be held up and read through the project’s camera app. In this way, users who may be unable to access the physical book of barcodes that power Between Page and Screen are still offered an experiential, material engagement with the text through their own critical making practices. Engaging the text in this way allows users not only to physically experience the kinetics and aesthetics of the augmented reality text, but also to engage the materiality and interaction poetics at the heart of the piece—precisely those poetics that are lost when the only available access to a text is a recording to be consumed. At the same time, engaging the text through the critically made chapbook prompts a material confrontation between the analog and the digital as complementary, even intimate, information technologies. Of course, in this case it is (perhaps) ironically not the anticipated analog technology that is least accessible; rather it is the digital complement, the Flash-based program that allows the camera to read the analog bar codes, that is soon to be inaccessible.

Complementing the Electronic Literature Collections are the preservation efforts underway at media archeology labs around the country, most notably the Electronic Literature Lab (ELL) directed by Dene Grigar at Washington State University, Vancouver. Currently, the ELL is working on preserving Flash-based works of electronic literature, through the NEH-sponsored Afterflash project. Afterflash will preserve 447 works through a combination process where researchers:

1) preserve the works with Webrecorder, developed by Rhizome, that emulates the browser for which the works were published, 2) make the works accessible with newly generated URLs with six points of access, and 3) document the metadata of these works in various scholarly databases so that information about them is available to scholars (Slocum et al. 2019).

Without a doubt, this is an exceptional project of preservation for ensuring some kind of access to Flash-based works of electronic literature, even after Adobe ends their support and maintenance of the software. In particular, the use of Webrecorder to capture the works means that the preservation will not just be a recorded “walk-through” of the text, but will capture the interactivity—an important poetic of this moment in e-literary practice.

As exceptional as this preservation project is, however, it is focused entirely (and not incorrectly) on preserving works so that they may be experienced by future readers who do not have access to Flash. But what of preserving Flash as a program particularly suited for making interactive, multimedia webtexts? As Salter and Murray argue, a major part of the platform’s success and influence on turn-of-the-century web aesthetics, web arts, and electronic literature has to do with its low barrier-to-entry for creating interactive, multimedia works, even for users who were not coders, or who were new to programming (Salter and Murray 2014). Pedagogically speaking, the amateur focus of Flash also meant that it was particularly well-suited for teaching digital poetics through critical making. In the first place, it upheld the work of feminist digital humanities to disrupt and resist the primacy of original, complex codework to the digital humanities and (more specifically) electronic literatures. In this way, it could operate as an ideal tool for feminist critical making pedagogies, both by promoting alternatives to writing for intellectual work, and by resisting the exclusivity behind prioritizing original, complex codework. In the second place, it allowed students to tinker with the particularities and subtleties of digital poetics (things like interaction, animation, kinetics, and visual / spatial design) without getting overwhelmed by the complexities and specifications of code. As the end of 2020, and with it the end of Adobe’s support for Flash, looms large, the question then becomes all the more urgent: if critical making offers an effective, feminist pedagogical model for teaching electronic literatures in the face of technological obsolescence, how can we maintain these practices in our undergraduate teaching in a post-Flash world?

Case Study: Stepworks

In response to this question, I propose: Stepworks. Created by Erik Loyer in 2017, Stepworks is a web-based platform for creating single-touch interactive texts that centers around the primary metaphor of staged, musical performance, an appropriate metaphor that resonates with traditions of e-literature and e-poetry that similarly conceptualize these texts in terms of performance. Stepworks performances are powered by the Stepwise XML, also developed by Loyer, but the platform does not require creators to work directly in the XML to make interactive texts. Instead, the program in its current iteration interfaces directly with Google Sheets—a point that, while positive for things like ease of use and supporting collaboration (features I discuss more fully in what follows), does introduce a problematic reliance on Google’s corporate decisions to maintain access to and workability of Stepworks and its texts. In the Stepworks spreadsheet, each column is a “character,” named across the first row, while the cells in each subsequent row contain what the character performs—the text, image, code, sound, or other content associated with that character. Though characters are often named entities that speak in text, they can also be things like instructions for use, metadata about the piece, musical instruments that will perform sound and pitch, or a “pulse,” a special character that defines a rhythm for the textual performance. Finally, each cell contains the content that will be performed with each single-click interaction, and this content will be performed in the order that it appears down the rows of the spreadsheet. Students can, therefore, easily and quickly experiment with different effects of single-click interactive texts, as they perform at the syllable, word, or even phrase level, just by putting that much content into the cell.
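For readers who think more easily in code, the spreadsheet structure described above can be approximated in a few lines of Python. This is only an illustrative sketch of the column-as-character, row-as-step idea; it is not how Stepworks or the Stepwise XML is actually implemented, and the sample text, the character names "Speaker A" and "Speaker B", and the function names `load_steps` and `perform` are all hypothetical.

```python
import csv
import io

# Hypothetical sheet contents, written as CSV for convenience.
# First row: character names. Each later row: one step (one click).
SHEET_CSV = """\
Speaker A,Speaker B
Good evening.,
,Good evening to you.
Shall we begin?,Yes.
"""


def load_steps(csv_text):
    """Return (characters, steps); each step maps a character to its cell content."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    characters, body = rows[0], rows[1:]
    steps = []
    for row in body:
        # Pad short rows so every character has a (possibly empty) cell.
        padded = row + [""] * (len(characters) - len(row))
        # Empty cells mean the character is silent on this step.
        step = {name: cell for name, cell in zip(characters, padded) if cell.strip()}
        steps.append(step)
    return characters, steps


def perform(steps):
    """Yield (step number, character, content) in spreadsheet order, top to bottom."""
    for number, step in enumerate(steps, start=1):
        for character, content in step.items():
            yield number, character, content


if __name__ == "__main__":
    _, steps = load_steps(SHEET_CSV)
    for number, character, content in perform(steps):
        print(f"click {number}: {character} performs {content!r}")
```

In this toy model, two characters with content in the same row perform together on a single click, while a blank cell leaves that character silent for the step.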

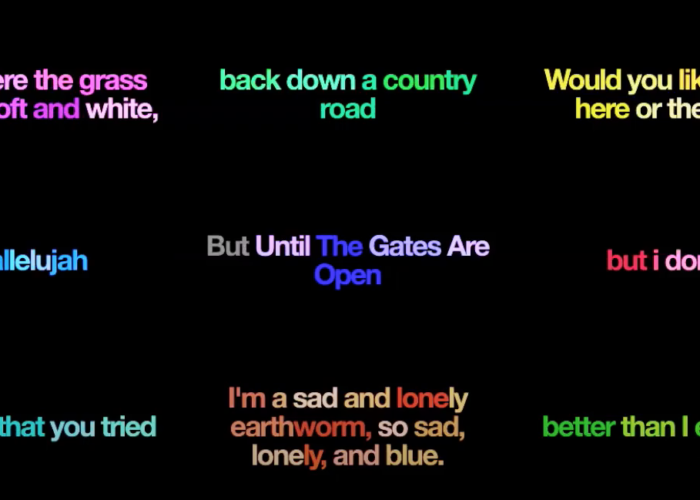

Figures 1, 2, and 3 illustrate some of these effects. Figure 1, a gif of a Stepworks text where each line of Abbott and Costello’s “Who’s on First” occupies each cell, showcases the effects of performing full lines of text with each interaction.

Figure 2, a gif from a Stepworks text based on Lin-Manuel Miranda’s Hamilton, showcases the effects of breaking up each cell by syllable and allowing the player to perform the song at their own pace.

Finally, Figure 3 is taken from Unlimited Greatness, a text that remediates Nike’s ad featuring the story of Serena Williams as single words. In this text, the effects of filling each cell with a single word are on display.

Pedagogically speaking, this range of illustrative content allows students to quickly grasp the different effects of manipulating textual performance in an interactive piece. At the same time, the ease of working in the spreadsheet offers them an effective place from which to experiment in their own work. For example, reflecting on the process of creating a Stepworks performance based on a favorite song, one student describes placing words purposefully into the spreadsheet, breaking up the entries by word and eventually by syllable, rather than by line:

I made them [the words] pop up one by one instead of the words popping up all together using the [&] symbol. I also had words that had multiple syllables in the song, so I broke those up to how they fit in the song. So, if the song had a 2-syllable word, then I broke it up into 2 beats.

Although the spreadsheet is not named explicitly, the student describes a creative process of purposefully engaging with the different effects of syllables, words, and lines, opting for a word- and syllable-based division in the spreadsheet to affect how the song’s remediation is performed (see Figure 4, the middle column of the top row).

Besides offering the spreadsheet as an accessible space in which to build interactive texts, Stepworks comes equipped with pre-set “stages” on which to perform those texts. Currently there are seven stages available for a Stepworks performance, and each of them offers a different look and feel for the text. For instance, the Hamilton piece discussed above (Figure 2) is performed on Vocal Grid, a stage which assigns each character a specific color and position on a grid that will grow to accommodate the addition of more characters; Who’s On First, by contrast, is performed on Layer Cake, a stage that displays character dialogue in stacked rows. As the stages offer a default set design for font, color, textual behavior, and background, they (like the spreadsheets) reduce the barrier-to-entry for creating and experimenting with kinetic, e-poetic works. Once a text is built in the spreadsheet and the spreadsheet published to the web, it may be directly loaded into any stage and performed; a simple page reload in the browser will update the performance with any changes to the spreadsheet, thereby supporting an iterative design process. The user-facing stage and design-facing spreadsheet are integrated with one another so that students may tinker, fiddle, experiment, and learn, moving between the perspective of the audience member and that of the designer.

In my own classes, it is at this stage of the process that additional, less tangible benefits of critical making pedagogy have come to light, especially in my most common Stepworks assignment: challenging students to remediate a favorite song or poem into a Stepworks performance. When students reach the stage of loading and reloading their spreadsheets into different stages in Stepworks, they also often begin exploring different performance effects for their remediated texts, regularly going beyond any requirements of the assignment to learn more about working with and in Stepworks to achieve the effects they want. Of this creative experience, one student writes:

In order to accurately portray the message of the song, I had to take time and sing the song to myself multiple times to figure out the best way to group the words together to appear on screen. Once this was completed, I had an issue where words would appear out of order because I didn’t fully understand the +1 (or any number for that matter) after a word. I thought that this meant to just add extra time to the duration of the word or syllable. I later figured out that it acted as a timeline of when to appear rather than the duration of the word’s appearance. Once I figured this out, it then came down to figuring out the best timing of the words appearing on each screen to match the original song.

Here the student’s reflection indicates a few important things. First, the reflection articulates the student’s own iterative design process, as they move between the designer’s and audience’s experiential positions to create the “best timing of the words” performed on screen. This same movement guides the student towards figuring out some more advanced Stepworks syntax: working with a “Pulse” character to give the piece a tempo or rhythm by delaying certain words’ appearances on screen (the reference to “+1”). As the assignment required an attention to rhythm, but did not specify the necessity of using the Pulse character to create a rhythm, this student’s choice to work with the Pulse character points to both a self-guided movement beyond the requirements of the assignment, and a developing poetic sensitivity to words and texts as rhythmic bodies that effect meaning through performance. In Stepworks, rhythm can be created by working down the spreadsheet’s rows (as in the Hamilton or Unlimited Greatness pieces in Figures 2 and 3); however, this rhythm is reliant on the player’s interactive choices and personal sensitivity to the poetics. Using a Pulse to effect rhythm overrides the player’s choice and assigns a rhythm to the contents within a single cell, performing them on a set delay following the user’s single-click.[6] Working with the Pulse, then, the student is letting their creative and critical ownership of their poetic design lead them to a direct confrontation with the culturally conditioned paradox of user control and freedom in interactive environments. This point is explicitly evoked later in the reflection, as the student expands on the effects of the Pulse, writing:

The timing of the words appearing on the screen … also enhance the impact and significance of certain words. My favorite example is how one word … “Hallelujah,” can be stretched out to take up 8 units of time, signifying the importance of just that one word

(see Figure 4, the leftmost text in the second row).
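The distinction the student arrives at, between a number that sets when a unit appears and a number that sets how long it lasts, can be made concrete with a small Python sketch. It does not reproduce Stepworks cell syntax or its scheduler: the `(text, number)` pairs, both function names, and the sample values are hypothetical, chosen only to show why the two readings produce different performances.

```python
from typing import List, Tuple

Unit = Tuple[str, int]  # hypothetical (text, number) pair for one syllable


def as_offsets(units: List[Unit]) -> List[Tuple[int, str]]:
    """Timeline reading: each number is when the unit appears,
    counted in pulse units after the click."""
    return sorted(((number, text) for text, number in units),
                  key=lambda pair: pair[0])


def as_durations(units: List[Unit]) -> List[Tuple[int, str]]:
    """Duration reading: each number is how long the unit lasts,
    so every start time is the sum of the durations before it."""
    schedule = []
    start = 0
    for text, number in units:
        schedule.append((start, text))
        start += number
    return schedule


# Numbers written as if they were durations (the student's first reading).
syllables: List[Unit] = [("Hal", 1), ("le", 3), ("lu", 2), ("jah", 4)]

if __name__ == "__main__":
    print("intended (durations):", as_durations(syllables))
    print("performed (offsets): ", as_offsets(syllables))
```

Under the duration reading the syllables keep their written order; under the timeline reading the same numbers reorder them, which matches the student's report of words appearing out of order until the numbers were treated as positions on a timeline.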

Indeed, across my courses students have demonstrated a willingness to go beyond the specifications of this assignment in order to fully realize their poetic vision. Besides the Pulse, students often explore syntax for “sampling” the spreadsheet’s contents to perform a kind of remix, customizing fonts or colors in the display, or adding multimedia like images, gifs, or sound. Thus, as Stepworks supports this kind of work, it simultaneously supports students’ hands-on learning of digital poetics, especially those popularized by works created with Flash.

Finally, I’d like to point to one last aspect of Stepworks that makes it an ideal platform for teaching e-literature through feminist critical making pedagogies. Because of its integration with Google Sheets, Stepworks supports collaboration, unfettered by distance or synchronicity. Speaking for my own classes and experiences teaching with Stepworks (particularly the Spring 2020 class), this is where the program really excels pedagogically, as it opens a space for teaching e-poetry through sharing ideas and poetic decisions, creating and experimenting “together,” and supporting students to learn from and with one another, even when the shared situatedness of the classroom is inaccessible. A powerful pedagogical practice in its own right, collaboration is also a core feminist technology, central to feminist praxis across disciplines, and a cornerstone of digital humanities work, broadly speaking. Indeed, it is one of the tenets of digital humanities that has contributed to the field’s disruption of and challenge to expectations of humanities scholarship.

As an illustration of what can be done in the (virtual, asynchronous) classroom through a collaborative Stepworks piece, I will end this case study with a piece my students created to close out the Spring 2020 semester, a moment that, to echo the opening of this article, threw us into a space of technological inaccess, of temporal obsolescence. Because we were unable to access many works of Flash-based e-lit that had been on the original syllabus, critical making through Stepworks was our primary (in some cases, only) mode of engaging with e-poetics, particularly of interaction and kinetics. Working from a single Google spreadsheet, each student took a character-column and added a poem or song or lyric that was, in some way, meaningful to them in this moment. The resulting performance (Figure 4) appears as a multi-voiced, found text; watching it or playing it, it is almost as if you are in a room, surrounded by others, sharing a moment of speaking, hearing, watching, performing poetry.

Conclusion

Technological obsolescence will undoubtedly continue to present a challenge for teaching digital texts and electronic literatures. As our systems update into outdatedness, we face the loss of both readerly access to existing texts and writerly access to creative potentialities. Flash, which, as of this writing, is facing its final days, is just one example of this cycle and its effects on electronic and digital literatures. As I have shown here, however, even as platforms (like Flash) become obsolete for contemporary machines, critical making through newer platforms with low barriers to entry like Stepworks can offer productive counters to this loss, particularly from the writerly perspective of, in this case, kinetic poetics. Indeed, this approach can enhance the teaching of e-literature and digital textuality more broadly, including where other platforms are inaccessible. For instance, Twine, a free platform for creating text-based games that runs on contemporary browsers, is a productive space for teaching hypertextual storytelling in place of Storyspace, which, though still functional on contemporary systems, is cost-prohibitive at nearly $150; similarly, Kate Compton’s Tracery offers an opportunity for first-time coders to create simple text generators, in contrast to comparatively more advanced languages like JavaScript, which require such focus on the code that the lesson on poetics of textual generation may be lost on overwhelmed students. Whatever the case may be, critical making through low-cost, contemporary platforms that are easy to use offers a robust space for teaching e-literary and digital media creation that maintains a feminist, inclusive, and equitable classroom ethos to counter technological obsolescence.