Introduction

Virtual reality (VR) and other extended reality (XR) technologies show great promise for supporting pedagogy in higher education. VR gives students the chance to immerse themselves in virtual worlds and engage with rich three-dimensional (3D) models of learning content, ranging from biochemical models of complex protein structures to cultural heritage sites and artifacts. Research shows that VR can increase student engagement, support the development of spatial cognitive skills, and enhance the outcomes of design-based activities in fields such as architecture and engineering. With these benefits, however, comes the risk that VR will exacerbate inequality and exclusion for disabled students.[1] Disability is typically defined as a combination of physical (e.g., not having use of one’s legs) and participation (e.g., not having a ramp so that a wheelchair user can access services) barriers. According to the Centers for Disease Control and Prevention, 26% of adults in the United States have a disability. These include cognitive, mobility, hearing, visual, and other types of disability.

As a class of technologies that engage multiple senses, VR has the capacity to engage users’ bodies and senses in a holistic, immersive experience. This suggests that VR holds great potential for supporting users with a diverse range of sensory, motor, or cognitive capabilities; however, there is no guarantee that the affordances of VR will be deployed in accessible ways. In fact, the cultural tendency to ignore disability, coupled with the rapid pace of technological innovation, has led to VR programs that exclude a variety of users. Within higher education, excluding disabled students from the benefits of these new technologies risks leaving behind a significant portion of the student population. The U.S. Department of Education, National Center for Education Statistics (2019) has found that 19.4% of undergraduates and 11.9% of graduate students have some form of disability. Libraries have long been leaders in supporting accessibility (Jaeger 2018) and the rise of immersive technologies presents an opportunity for them to continue to be leaders in making information available to all users. Academic libraries, the focus of this paper, are particularly well positioned to address the challenges of VR accessibility given their leadership in innovative information services and existing close relationships with the research and pedagogy communities at their institutions.

In what follows, we present a brief outline of the recent emergence of VR technologies in academic libraries, introduce recent research on VR accessibility, and conclude with a discussion of two brief case studies drawn from the authors’ institutions that illustrate the benefits and barriers associated with implementing accessibility programs for VR in academic libraries.

VR in Higher Education

“Virtual reality” or “VR” refers to a class of technologies that enable interactive and immersive experiences of computer-generated worlds, produced through a mixture of visual, auditory, haptic, and/or olfactory stimuli that engage with the human sensory system and provide the user with an experience of being present in a virtual world. In most VR systems, visual and auditory senses are primarily engaged, with increasing research being done on integrating haptics and other stimuli. Different levels of immersion and interaction are possible depending on the specific configuration of devices, from relatively low immersion and low interaction provided by inexpensive 3D cardboard viewers for use with mobile devices (e.g., Google Cardboard) to expensive head-mounted displays (HMDs) such as the HTC Vive and Oculus Rift systems that use headsets and head and body tracking sensors to capture users’ movements along “six degrees of freedom” (three dimensions of translational movement along x, y, and z axes, plus three dimensions of rotational movement, roll, pitch, and yaw). At present, HMDs are more commonly used than CAVEs, or “Cave Automatic Virtual Environment,” room-sized VR environments that use 3D video projectors, head and body tracking, and 3D glasses to provide multi-user VR experiences (Cruz-Neira et al. 1992), which have been used in academic contexts since the 1990s. This interest in new information technologies that provide library users with access to computer-generated worlds is not new for librarians. The current interest in VR follows experimentation conducted in libraries beginning earlier in the 2000s on “virtual worlds,” 3D computer-generated social spaces, such as Second Life, that users interacted with through a typical configuration of 2D computer monitor, mouse, and keyboard. 
Libraries envisioned these technologies as potential tools for expanding library services and enhancing support for student learning, and researchers evaluated the pedagogical efficacy of these new tools (e.g., Bronack et al. 2008; Carr, Oliver, and Burn 2010; Deutschmann, Panichi, and Molka-Danielsen 2009; Holmberg and Huvila 2008; Praslova, Sourin, and Sourina 2006).

Since the commercial release of affordable VR systems such as the HTC Vive and Oculus Rift in 2016 (and now cheaper, lower-resolution variants such as Oculus Go and Oculus Quest), academic libraries have started seriously exploring the possibility of VR to support research and pedagogy. They have begun to conceptualize VR as a platform for immersive user engagement with high-resolution 3D models that support existing curricular activities, such as the use of archaeological, architectural, or scientific models in classroom exercises. Cook and Lischer-Katz (2019) argue

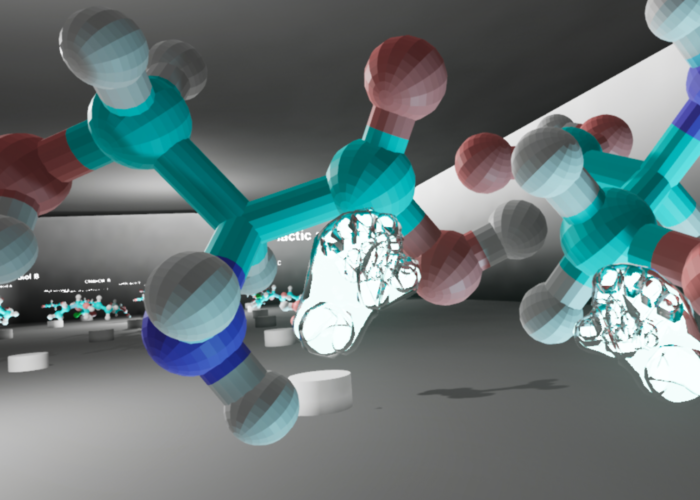

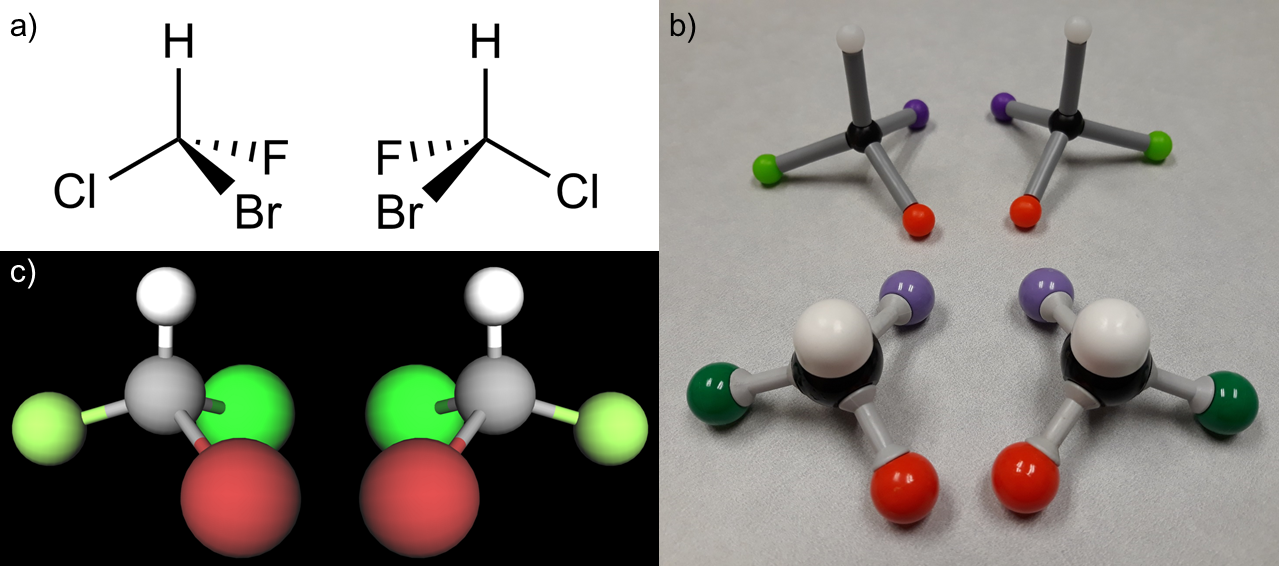

the realistic nature of immersive virtual reality learning environments supports scholarship in new ways that are impossible with traditional two-dimensional displays (e.g., textbook illustrations, computer screens, etc.). … Virtual reality succeeds (or fails), then, insofar as it places the user in a learning environment within which the object of study can be analyzed as if that object were physically present and fully interactive in the user’s near visual field. (70)

VR has been used to support student learning in a variety of fields, such as anthropology and biochemistry (Lischer-Katz, Cook, and Boulden 2018), architecture (Milovanovic 2017; Pober and Cook 2016; Schneider et al. 2013), and anatomy (Jang et al. 2017). Patterson et al. (2019) describe how the librarians at the University of Utah have been incorporating VR technologies into a wide variety of classes, supporting students in architecture, geography, dentistry, fine arts, and nursing. From this perspective, VR is envisioned as a tool for accessing digital proxies of physical artifacts or locations that students would ordinarily engage with as physical models (for instance, casts of hominid skull specimens), artifacts, or locations, but which are often too expensive or difficult to access directly.

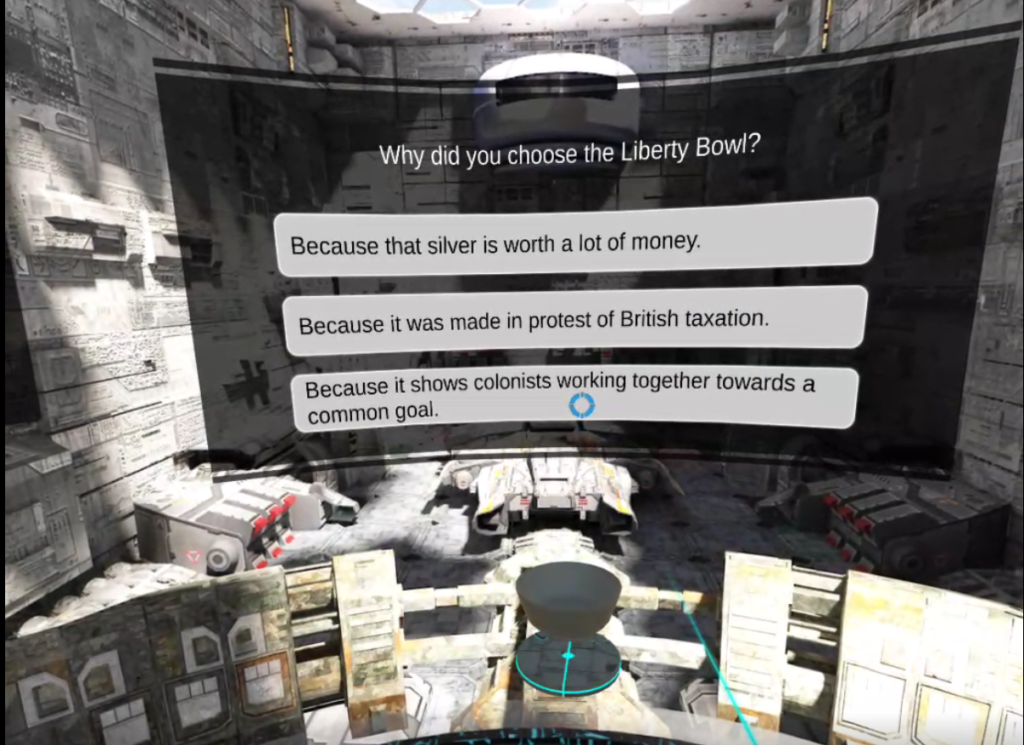

In addition to providing enhanced modes of access to learning materials, using VR can also enhance student engagement and self-efficacy if implemented in close consultation with faculty (Lischer-Katz, Cook, and Boulden 2018). The technical affordances of VR, when deployed with care, are able to support a range of pedagogical objectives. Dalgarno and Lee (2010) identified representational fidelity (i.e., realistic display of objects, realistic motion, etc.) and learner interaction (i.e., student interaction with educational content) as key affordances of VR technologies, which they suggest can support a range of learning benefits, including spatial knowledge representation, experiential learning, engagement, contextual learning, and collaborative learning. Chavez and Bayona (2018) surveyed the research literature on VR and identified interaction and immersion as the two aspects of VR that should be considered when designing VR learning applications. Similarly, Johnson-Glenberg (2018) identified a set of design principles for using VR in education based on related affordances of VR, namely “the sense of presence and the embodied affordances of gesture and manipulation in the third dimension” (1), and found that “active and embodied learning in mediated educational environments results in significantly higher learning gains” (9). Research also suggests that the special visual aspects of VR, such as depth perception and motion cues (Ware and Mitchell 2005), head tracking (Ragan et al. 2013), and immersive displays (Ni, Bowman and Chen 2006) are able to enhance the analytic capabilities of human perception. VR has been shown to enhance human abilities of visual pattern-recognition and decision-making, particularly when working with big data (Donalek et al. 2014), prototyping (Abhishek, Vance, and Oliver 2011), or understanding complex spatial relationships and structures in data sets (Prabhat et al. 2008; Kersten-Oertel, Chen and Collins 2014; Laha, Bowman and Socha 2014).

Immersion is often identified by researchers as a key characteristic of VR technologies that is applicable to enhancing the learning experiences of students. Fowler (2015) identified three types of VR immersion relevant to pedagogy: Conceptual immersion, which supports development of abstract knowledge through students’ self-directed exploration of learning materials, for instance, molecular models; task immersion, in which students begin to engage with and manipulate learning materials; and social immersion, in which students engage in dialogue with others to test and expand upon their understanding. One critique of the applications of VR-based pedagogy is that instructional designers and instructors rarely indicate their underlying learning models or theories (Johnston et al. 2018). For instance, Lund and Wang (2019) found that VR can improve student engagement in library instruction, but do not specify which pedagogical models are effective, instead comparing a particular classroom activity with traditional classroom methods versus the same activity using VR, measuring impact on academic performance and motivation. Radianti et al. (2020), in their review of 38 recent empirical studies on VR pedagogy, acknowledge that while immersion is a critical component of the pedagogical affordances of VR, different studies define the term differently. They also found that only 32% of the studies reviewed indicated which learning theories or models underpin research studies, which makes it difficult to generalize approaches and apply them to other contexts. Radianti et al. (2020) point out that “in some domains such as engineering and computer science, certain VR applications have been used on a regular basis to teach certain skills, especially those that require declarative knowledge and procedural–practical knowledge. However, in most domains, VR is still experimental and its usage is not systematic or based on best practices” (26).

What these trends suggest is that VR shows great potential for use in supporting classroom instruction in higher education institutions, even though pedagogical models and methods of evaluation are still being developed and most projects are in the experimental phase of development. Some fields have already been adopting VR into their departments, such as computer science, engineering, and health science programs, but academic libraries are leading the way in promoting VR for their wider campus communities (Cook and Lischer-Katz 2019). Since many libraries are emerging as leaders in supporting VR, it is essential for them to have policies and support services in place to ensure that these new technologies are usable by all potential users at their institution.

As librarians consider adopting these innovative technologies, discourses of innovation can sometimes lead to oversights that may exclude some users. VR technologies enter libraries alongside other emerging technologies and innovative library services. The current discourse of transformational change promoted by the corporate information technology sector is often at odds with critical approaches to librarianship that stress inclusion and social justice (Nicholson 2015). These conceptions of radical innovation and disruption construct institutions, their policies, and regulations as structures that only function to slow down and constrain innovation. The assumption is that innovative technology is inherently neutral in terms of its ethics and politics, and that it does not require institutional processes to constrain or limit its negative effects; however, by decoupling technological change from institutionalized processes that protect the rights of historically marginalized groups of library patrons, technological change inevitably reinscribes exclusion into the infrastructures of learning. As Mirza and Seale (2017) argue

technocratic visions of the future of libraries aspire to a world outside of politics and ideology, to the unmarked space of white masculinity, but such visions are embedded in multiple layers and axes of privilege. They elide the fact that technology is not benevolently impartial but is subject to the same inequities inherent to the social world. (187)

The idea that technologies embed biases and cultural assumptions is not new: scholars in the field of Science and Technology Studies have argued for decades that technologies are never neutral (e.g., Winner 1986). Yet librarians, library administrators, and library science researchers often forget to examine their own “tunnel vision and blind spots” (Wiegand 1999), or more precisely, the unexamined implicit biases that shape decision making about which technologies to adopt and how to deploy them in libraries. On the other hand, this also means that it is possible to balance innovation with inclusivity by foregrounding library values at the start of the process of innovation, rather than by retrofitting designs, which can yield results that are less equitable and more costly (Wentz, Jaeger and Lazar 2011). Clearly, the learning affordances of VR (Dalgarno and Lee 2010), as they are currently designed, need to be reimagined for disabled users.

VR and Accessibility

Aside from these ethical considerations, as VR becomes increasingly common in education, business, and other domains, it becomes answerable to legal guidelines. Federal guidelines for more established information and communication technology can be found in Section 508 of the Rehabilitation Act (see U.S. General Services Administration n.d.), which utilizes Web Content Accessibility Guidelines (WCAG) 2.0 as a standard for web technology (W3C Web Accessibility Initiative 2019). WCAG provide guidance on how to make web content accessible to disabled people and they are overseen by the Web Accessibility Initiative (WAI), part of the World Wide Web Consortium (W3C) (see W3C Web Accessibility Initiative 2019). While they provide a valuable framework, WCAG do not directly apply to immersive technologies and there are currently no accessibility guidelines that do so. Work has been done to develop individual accessibility extensions, hardware, and features, but measurable guidelines that would aid in accessible design are still needed. Only in the last few years have accessibility specialists started adapting existing guidelines by examining existing initiatives and mapping them to the success criteria in WCAG. This includes the XR Access Symposium that was held in the summer of 2019 (see Azenkot, Goldberg, Taft, and Soloway 2019), as well as W3C’s Inclusive Design for Immersive Web Standards Workshop held in the fall of 2019 (see W3C 2019). There are also more specific guidelines that can contribute to design considerations, such as the Game Accessibility Guidelines that are more focused on game design (see Ellis et al. n.d.). 
Increasing the urgency of this matter, as of December 31, 2018, any video game communication functionality released in 2019 or later must be accessible to disabled people under the 21st Century Communications and Video Accessibility Act (Enamorado 2019), which expands the group of industries mandated to meet accessibility guidelines to include the video game industry.

Those interested in learning more about the accessible design of VR and other immersive technologies should consider reading “Accessible by Design: An Opportunity for Virtual Reality” (Mott et al. 2019), which provides general guidelines for designing accessible VR. For an example of designing accessible tools for a specific user group, see Zhao et al. (2019), which details the development of a VR toolkit for supporting low-vision users.

Before going any further, it is important to distinguish between VR in its current, popularized form vs. the affordances of VR as a medium. The initiatives, guidelines, and research projects referred to in this section are still largely focused on analyzing the design of the former. However, in order for the technology to become truly accessible, critical inquiry must continue to progress in its understanding of the broader capabilities, limitations, and levels of interaction that construct the latter. The design practices and recommendations that have been developed to support the accessibility of VR are largely individualized and prototypical, which means that each institution’s particular experiences tackling the challenges of accessible VR will vary based on a number of factors. These factors include their individual histories supporting VR, staffing levels and development support, resources, and institutional commitments to accessibility. As librarians at Temple University and University of Oklahoma, we are now in the process of developing guidelines and tools to meet these challenges.

VR at Temple University’s Loretta C. Duckworth Scholars Studio

Temple University’s Loretta C. Duckworth Scholars Studio (LCDSS) “serves as a space for student and faculty consultations, workshops, and collaborative research in digital humanities, digital arts, cultural analytics, and critical making” (Temple University Libraries n.d.). Before the main library’s relocation to its new building, the LCDSS, formerly known as the Digital Scholarship Center (DSC), was located in the basement of Paley Library. Upon its 2015 opening, the DSC had two Oculus Rift DK2 headsets available for interested users. Its space in the new Charles Library includes an Immersive Visualization Studio designed for up to 10 people to simultaneously participate in immersive experiences and, as of 2019, houses twelve headsets from a variety of manufacturers in addition to mobile-based headsets, with an eye toward continuous acquisition of newer technologies. There are six full-time staff members, one of whom is responsible for the upkeep and management of the Immersive Studio among their other duties.

In August of 2017, I (Jasmine Clark) began researching the accessibility of VR as part of a project I was developing during my library residency.[2] Upon reviewing existing literature, it was apparent that research on the usability of VR for disabled users was in its early stages. Most notable was a report, “VR Accessibility: Survey for People with Disabilities,” resulting from a survey of disabled VR users produced in partnership by ILMxLab and the Disability Visibility Project (see Wong, Gillis, and Peck 2018). However, the majority of research and resources exploring the applications of VR to disabled people were composed of one-off solutions and extensions. This included cases of VR being used as an assistive technology (e.g., spatial training for blind individuals), unique hardware solutions (e.g., the haptic cane), and known issues for specific types of users (e.g., assumed standing position in games being disorienting for wheelchair users). These developments, while valuable, were not design standards or solutions broadly adopted by the game industry. Another concern was the fact that, in the context of the DSC, VR was not just a technology, but also a service that included training and assistance in its use for library patrons. This added an additional layer of complexity because, while there have been discussions on disability in the context of making and makerspaces, there was no literature on accessible service policies, best practices, and documentation for digital scholarship as a whole. In response to these challenges, I began examining existing guidelines and assessing their applicability to emerging technologies. Because WCAG is the federal standard, I joined a working group that guided me through reading the supporting documents and success criteria of WCAG, as well as examining the major legislative changes that were happening around accessibility at that time. 
I also began working with Jordan Hample, the DSC’s (now LCDSS’s) main technical support staff member, to understand whether or not these guidelines were applicable to immersive technologies.

Because we also needed to address service practices and policies, I decided that user testing would be necessary. User testing would consist of three phases that would take place during a single visit: a pre-interview (to ensure safety and gain an understanding of a user’s disability and previous technical experience), a use test (where users would use VR headsets), and a post-interview (to solicit feedback). I coordinated with Temple’s Disability Resources and Services (DRS) and DSC staff to bring in disabled stakeholders (students, alumni, and other members of the Temple community) in an attempt to 1) determine whether or not they would be able to utilize the equipment, and 2) determine if there were barriers to providing them with the same level of service as other patrons. As Wong, Gillis, and Peck (2018) point out in their report, “people with disabilities are not a monolith—accessibility and inclusion is different for everyone” (1). In order to scope the research to a manageable scale, I decided we would begin with visually impaired, deaf/Hard-of-Hearing (HOH), and hearing impaired users (hearing impairment would include individuals with tinnitus, or other auditory conditions not included under the umbrella of deaf/HOH). Working with Jordan, as well as Alex Wermer-Colan, a Council on Library and Information Resources (CLIR) postdoctoral fellow, I proceeded to draft a research protocol that consisted of interview questions and an explanation for participants of what VR is and the purpose of the research being conducted. These were all sent out via DRS listservs to solicit participants. VR services in the DSC involved a lot of hands-on onboarding and orientation from staff. Often, patrons would drop in and simply want to get acquainted with the technology. 
As a result, the goal of the research project was for disabled participants in our user testing to be able to navigate to our space and successfully work with the staff members responsible for providing VR assistance to identify experiences that would be as usable as possible for them. There was also a need to better understand staff preparedness in providing assistance to disabled patrons. In the months leading up to the testing, I had preliminary discussions with staff, and also inquired into staff training on accessibility and disability more generally at the library and university level. I found that training was not formalized, so I gathered and shared resources with my colleagues to ensure the safety and dignity of participants. This included referring to Gallaudet University’s guide on working with American Sign Language (ASL) interpreters (see Laurent Clerc National Deaf Education Center 2015) and various video tutorials on acting as a sighted guide for blind/low-vision people, and maintaining active discussions and explanations around ableism and disability. The discussions also allowed for better understanding of gaps in training and norms.

Once staff were sufficiently prepared, user testing commenced in the summer of 2018. Four participants were invited to the center, three of whom had various visual impairments and one of whom was deaf. On the days of their visits, I would go to the library entrance to greet and guide anyone who needed assistance. Upon arrival, they were brought into a meeting room for a pre-interview that would reintroduce the purpose of user testing, gauge any previous experience with the technology, and identify safety concerns by asking if they had other sensitivities that they felt would be a problem in VR (e.g., sensory sensitivities, sensitivity to flashing lights, etc.). We also asked about level of hearing/vision to get a better idea of which types of experiences worked for different types of hearing/vision. Some immediate questions brought up by participants were around accuracy of sound, depth perception, and similarity to real-world visual experience. Once the initial interview was completed, they were guided out to work with Jordan to identify potential experiences, similar to the way he typically worked with students. I took notes on the interactions, and Alex assisted as needed. Alex’s presence became particularly important when it came to the deaf user. It was brought to our attention that 1) due to variations in inner ear formation, those who were deaf/HOH were at higher risk for vertigo and, 2) a user reliant upon an ASL interpreter would not be able to see the interpreter while in the headset, complicating human assistance. In response, Alex took on the role of surrogate for this participant while they watched his activity on a monitor and gave instructions and feedback. Jordan took on the role of listening to the participants’ verbal feedback on each experience and, utilizing his knowledge of the DSC’s licenses for different VR programs, selected experiences that would be more accommodating to their specific hearing/visual needs.

Upon completion of this phase, participants were brought back into the meeting room for a post-interview. Responses to both interviews, as well as observations made during the interactions, were compiled and summarized into an internal report for our team. We had initially planned to have more users come in, but found that feedback on the limitations of the technology was consistent and addressable enough for us to make adjustments that would allow us to improve services and collect more nuanced data moving forward. For example, it was clear that the software varied so drastically that, in order to provide safe and effective services, it would be necessary to index the features and capabilities of various VR experiences.

The timing of this work was crucial, as we were a year away from the move to our new space, and the findings from the study helped us plan for it. The LCDSS is significantly larger than the DSC, and much more visible. While the move has required that we re-envision our service policies and programming, it has also given us the opportunity to integrate accessibility into our work from the beginning. One way we are doing this is by developing an auditing workflow that would allow any staff member or student worker to examine newly licensed VR experiences and produce an accessibility report, as there is a glaring lack of Voluntary Product Accessibility Templates (VPATs) for VR products. These reports would detail accessibility concerns and limitations at the beginning, allowing us to better serve disabled patrons. We are also working with the university’s central Information Technology Services to look at how this can be incorporated into broader LCDSS purchasing practices and documentation workflows.
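
As one way of picturing what such an audit might record, the sketch below models an accessibility report for a single VR experience. It is a hypothetical illustration in Python, not the LCDSS workflow itself: the `ExperienceAudit` fields, the wording of each concern, and the example title are all assumptions, chosen to resemble the kinds of barriers discussed in this paper.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ExperienceAudit:
    """Hypothetical audit record for one licensed VR experience."""
    title: str
    seated_play_supported: bool
    controllers_required: bool
    captions_available: bool
    flashing_lights: bool
    notes: List[str] = field(default_factory=list)


def concerns(audit: ExperienceAudit) -> List[str]:
    """List the accessibility concerns implied by the audit answers."""
    found = []
    if not audit.seated_play_supported:
        found.append("assumes a standing position")
    if audit.controllers_required:
        found.append("requires hand-held controllers")
    if not audit.captions_available:
        found.append("no captions for audio content")
    if audit.flashing_lights:
        found.append("contains flashing lights")
    return found


def render_report(audit: ExperienceAudit) -> str:
    """Format the audit as a short plain-text report."""
    lines = [f"Accessibility report: {audit.title}"]
    issues = concerns(audit)
    lines += [f"- Concern: {c}" for c in issues] or ["- No concerns recorded"]
    lines += [f"- Note: {n}" for n in audit.notes]
    return "\n".join(lines)


# Example with made-up values for an imaginary experience.
audit = ExperienceAudit(
    title="Example Museum Tour",
    seated_play_supported=False,
    controllers_required=True,
    captions_available=True,
    flashing_lights=False,
    notes=["Tested on one headset only"],
)
print(render_report(audit))
```

Recording the same questions for every title would also yield the kind of index of features and capabilities that our user testing suggested was necessary.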

Once this workflow is finalized, it will be used to support LCDSS staff in aiding faculty and researchers in the development of Equally Effective Alternative Access Plans (EEAAP) for their research and teaching. An EEAAP documents how a technology will be used in a class or program, its accessibility barriers, the plan to ensure equitable participation for disabled people, and the parties responsible for ensuring the plan is carried out. LCDSS staff frequently consult with faculty who wish to integrate LCDSS resources into their pedagogical practices. This can include feedback on assignment structure and design, recommended technologies, and other vital information required for pedagogical efficacy. By generating accessibility reports that identify technical limitations, LCDSS staff can aid faculty in developing multimodal approaches to integrating these technologies into their teaching. This means not only that we bring accessibility to their attention early, but also that we are able to guide them and reduce intimidation, making buy-in more likely. Moving forward, Jordan Hample and I will be making all materials involved in this workflow publicly available, as well as continuing and expanding user testing to include other disabilities.
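
The four elements of an EEAAP named above can be pictured as a simple record. The following Python sketch is an illustration only: the class and field names are our own hypothetical labels for those four elements, and the completeness check is an assumed convenience rather than part of any official EEAAP template.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EEAAP:
    """Hypothetical record mirroring the four elements of an EEAAP."""
    technology_use: str                # how the technology will be used
    accessibility_barriers: List[str]  # known barriers
    equitable_participation_plan: str  # plan to ensure equitable participation
    responsible_parties: List[str]     # who ensures the plan is carried out

    def missing_sections(self) -> List[str]:
        """Return the names of sections that are still empty."""
        return [name for name, value in vars(self).items() if not value]


# Example draft with made-up content; two sections are not yet filled in.
draft = EEAAP(
    technology_use="Students view 3D models in VR during one class session",
    accessibility_barriers=["requires hand-held controllers"],
    equitable_participation_plan="",
    responsible_parties=[],
)
print(draft.missing_sections())
```

A check of this kind could flag incomplete plans during a faculty consultation, before the technology reaches the classroom.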

VR at the University of Oklahoma Libraries, Emerging Technologies Program

Accessibility initiatives for VR at the University of Oklahoma have followed a slightly different trajectory than the one outlined by Jasmine in the previous section. The VR program at OU Libraries was officially launched in 2016 in the Innovation @ the EDGE Makerspace, which began hosting classes and integrating VR content into the course curriculum, including initial integrations within biology, architecture, and fine arts courses (Cook and Lischer-Katz 2019). We use custom-built VR software that enables users “to manipulate their 3D content, modify environmental conditions (such as lighting), annotate 3D models, and take accurate measurements, side-by-side with other students or instructors” and supports networked, multiuser VR sessions, forming “a distributed virtual classroom in which faculty and students in different campus locations [are able to] teach and collaborate” (Cook and Lischer-Katz 2019, 73). Librarians provide VR learning opportunities in three main ways: 1) deployment in the library-managed makerspace; 2) facilitated course integrations; 3) special VR events. Each approach requires different levels of support and planning from librarians. In the case of deployment in our makerspaces, students are able to learn about the technology in a self-directed manner, with guidance from trained student workers who staff the space. Workshops and orientation sessions are available, and students, faculty, and community members typically drop in when they want and engage with technology in a self-directed manner. Since the focus of this space is on self-directed learning and experimentation, the training of student support staff is essential for ensuring that the space feels welcoming and inclusive to visitors and that staff are able to adjust the level of support they provide based on the needs of the visitors to the space.

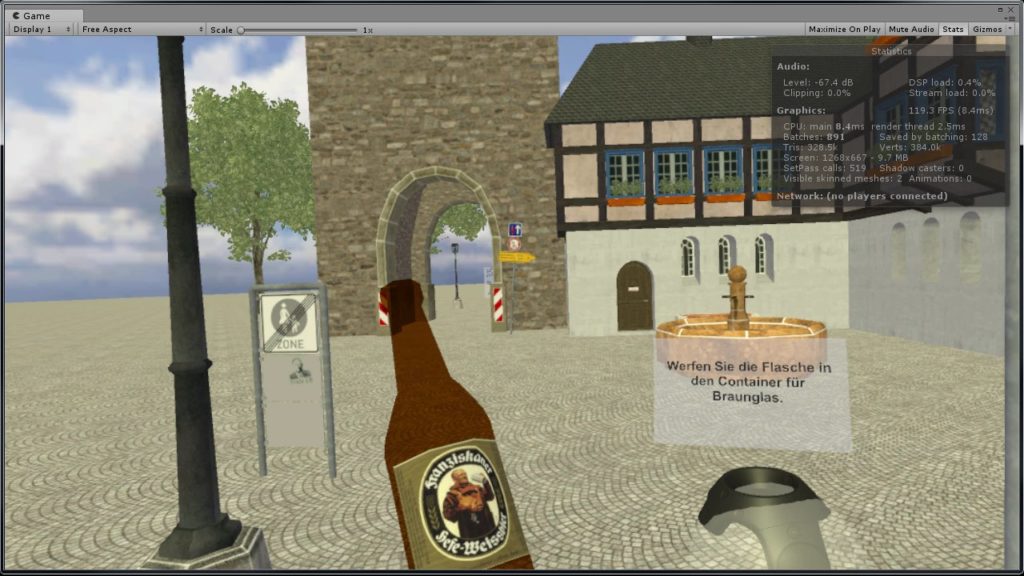

In the case of course integrations, students are typically brought to our makerspace during regularly scheduled class time. We have portable VR kits that use high-powered gaming laptops and Oculus Rift headsets, which makes it possible to bring the learning experiences directly into the classroom if the faculty member prefers. Examples of VR-based classroom activities include interacting with 3D models that simulate learning objects, such as examining the morphology of different hominid skull casts in an anthropology class or analyzing complex protein structures and processes in a biochemistry class. VR is also used in other classes as a creative tool, such as in a sculpture course in which the students created sculptures in VR and then printed them using the 3D printers in the makerspace. In planning VR course integrations, librarians work directly with faculty members to design activities that will support their course learning objectives.

VR is also used frequently at OU Libraries for special events in which experts lead participants on guided tours through scholarly, high-resolution 3D models. Participants can join the VR tour on campus or from other institutions, since our custom-built VR software supports networked, multi-user sessions. Examples include inviting an archaeologist to lead a group through a 3D scan of a cave filled with ancient rock carvings that is located in the Southwestern United States (Schaffhauser 2017), as well as a tour led by a professor of Middle Eastern History through a 3D model of the Arches of Palmyra, located in Syria.

From the start of the emerging technologies initiative at OU Libraries, rapid innovation was a guiding principle, with the hope that the benefits of emerging technologies could be demonstrated to the broader campus community and that the library could become a hub for supporting emerging technologies across campus. It was important to quickly develop a base of VR technologies and librarian skills in order to promote the potential benefits of the technologies to faculty and students across campus. Starting in January 2016, students and faculty began using our VR spaces for research, learning, experimentation, and entertainment, and by 2018 we had faculty from over 15 different academic departments across campus using VR as a component in their classes (Cook and Lischer-Katz 2019), along with over 2000 individual uses of our VR workstations. By 2019, the emerging technology librarians (ETL) unit had grown to five full-time staff members who worked together to “rapidly prototype and deploy educational technology for the benefit of a range of University stakeholders” (Cook and Van der Veer Martens 2019, 614). At this time, concerns were raised by one of our ETLs about the accessibility of existing VR services and the ETL team brought in an accessibility specialist to advise them. One of the key challenges the team identified through the process of reviewing their existing VR capabilities was the fact that most commercially produced VR software lacks accessibility options, particularly in terms of compatibility with assistive devices. In reviewing users’ experiences in our makerspace, ETLs found that users with dexterity, coordination, or mobility disabilities often request passive VR experiences that provide immersive experiences without the need for use of the VR controller inputs. 
For programs such as the popular Google Earth VR, it is not currently possible to provide users with passive experiences; rather, the user needs to be able to actively control the two VR controllers themselves to engage with the VR experience. To the team’s surprise, some of the lower-resolution, untethered VR systems, such as the Oculus Go, have shown more capabilities for providing passive experiences that rely only on head tracking and the use of target circles for movement through the VR space. Making narrated and guided tours for a VR experience available is essential for providing access to some groups of disabled users. Ensuring that VR controllers are accessible has also been a challenge, and ETLs have begun experimenting with 3D printing add-on components to make the VR controllers more usable for users with limited hand function. In response to the lack of accessibility options for commercial software releases, modifications were made to OU’s custom-built VR software to provide accessibility capabilities, including: 1) controls for changing the sensitivity of VR interface controls; and 2) options for user interface text resizing. These modest modifications were made in consultation with VR users. Technical solutions alone are not sufficient, of course, and the ETL team has also found it very important to continue to improve training for student staff so that they are prepared to properly assist disabled users in a sensitive and respectful way. Communicating clearly to the wider university community about what accessible software and hardware capabilities are available is also a challenge that the team is tackling. These activities are still ad hoc in many ways, and we have found that additional work is needed to develop procedures for addressing VR accessibility in a more systematic way in the library and across campus.

The ETL team is taking several approaches to improving our support for accessible VR, looking outward to resources beyond the walls of OU Libraries and looking inward to resources at the university to support improvements to accessibility. ETLs are expanding their knowledge base through involvement in accessibility conferences and working groups and looking to our colleagues at other institutions, such as Temple University Libraries, for guidance on policies and procedures for evaluating and implementing VR software and hardware. The ETL team is planning on conducting future usability testing and focus groups with a range of disabled users from the OU community in order to further refine the feature set of our custom software, which we plan to package and distribute for other institutions to use and build upon.

The experiences of ETLs at OU Libraries point to the importance of working with accessibility experts and bringing disabled users into the design process to develop technologies and policies. Librarians should not be expected to take on accessible design by themselves; rather, they should look to experts in this field for assistance. Working with our University’s disability coordinator has been essential for helping us to identify areas where we need to improve our accessibility capabilities, as well as providing us with a network of disabled users on campus who could provide us with user feedback on our technologies. The types of issues we are looking into include techniques for auditing VR software for accessibility issues, providing clearer signage and information on websites to provide students and faculty with a clear understanding of which emerging technology tools are accessible and what accommodations are possible, and ways in which we can continue to improve staff training so that the student workers who staff our makerspace can better support disabled users. The process of developing policies and establishing processes and documentation to support those policies does take time; however, this work has been essential for training staff and establishing best practices at our makerspace in order to address the challenges of VR accessibility. Additional work is necessary to codify this ongoing and still experimental work into institutional policy documents and continue to seek out adaptive tools to make VR accessible for a greater range of library patrons.

Conclusion

The current wave of immersive technologies was not initially designed for users with varying levels of visual, auditory, mobility, and neurological capabilities. Even for libraries and centers that do have development support, there is no way to remediate the inaccessibility of every experience used and, even if there were, there would be no way to keep up with the regular updates of hardware and software. One-off, localized solutions cannot replace structural change. In order for VR to become an accessible medium, developers, hardware manufacturers, distribution platforms, and other stakeholders involved in its creation and distribution need to ensure accessibility within their respective roles. The current lack of support from these stakeholders makes it crucial that library staff and the educators that they support understand disability and accessibility, develop appropriate documentation, and advocate for software and hardware vendors to provide better accessibility support in their products. In the meantime, libraries supporting different tiers of VR use and investment will have to consider different approaches to accessibility.

The preceding examples drawn from our experiences at Temple University and the University of Oklahoma (OU) show the range of issues facing accessible VR, but also show the differences in approach for different service models and pedagogical objectives. Temple University includes VR in a very broad suite of technical offerings and its faculty are not currently at the phase of “buy-in” where regular VR development is a priority. As a result, Temple’s focus is on indexing experiences and integrating alternative access plans, with accessible development occurring on a smaller scale. In comparison, OU has much more of a demand for custom-developed software solutions. This demand is due to the fact that one of the main VR applications that OU promotes for course integrations is its own flexible, custom software, which supports a variety of disciplines, including courses in biochemistry, anthropology, architecture, and English. OU is beginning to investigate the accessibility challenges of working with commercial software and is looking to Temple for guidance on how to properly evaluate different software titles and provide adequate documentation. For libraries without developer support, we can expect that the focus will more likely follow Temple’s approach. For libraries with regular development efforts, supporting home-grown accessible design practices, such as those at OU, will be more of a central activity. Some libraries will be a mixture of the two, working to blend commercial and homegrown solutions. Regardless of a library’s approach, the major takeaways for other institutions to consider as they bring accessibility thinking into their VR programs include:

- Plan for Accessibility from the Beginning: Libraries can save time and resources by thinking about accessibility issues at the start of a program or project.

- Lack of Standards: As of 2020, there are no standards for accessible VR design, but there are related standards that could lay the groundwork for their development.

- Developer Support is Essential: Libraries that intend to develop VR experiences need to have sufficient developer support with accessibility expertise.

- Importance of Auditing and Reporting: Out-of-the-box VR experiences will pose different accessibility challenges from one person to the next and should be audited to better understand these barriers to access. If a library lacks a developer to modify software or create new software, at the very least, available software needs to be audited and have a corresponding accessibility report produced.

- VR is Not the Pedagogy: VR should be another tool in an educator’s arsenal, not the sole focus of a class (unless VR is the course subject). As Fabris et al. (2019) suggest, “Having VR for the sake of having VR won’t fly; the VR learning resources need to be built with learning outcomes in mind and the appropriate scaffolds in place to support the learning experience” (74).

- Acknowledge the Limits of VR Accessibility: There are limits to making VR accessible. The reality is that there will be students who are unable to use VR for a variety of reasons. Therefore, there should always be an alternative access plan developed so that students have access to non-VR learning methods as well.

Considering these best practices will better enable libraries to approach the challenges of making VR accessible. Putting them into action will directly benefit disabled users, improve librarians’ abilities to make their innovative technology spaces more inclusive, and help administrators to better plan and allocate resources for supporting the missions of their institutions. While these guidelines are focused on supporting academic libraries, they will likely benefit higher education applications outside of the library, too.

Additionally, while it is true that there is extensive work to be done, there are existing inclusive instructional approaches that can be integrated into VR-based coursework by individuals. Multimodal course design and Universal Design for Learning (http://udloncampus.cast.org/page/udl_about) are frameworks that can be applied to VR coursework with approaches like collaborative assignments and activities. It is also worth reviewing a 2015 special issue of Journal of Interactive Technology and Pedagogy that considers the benefits of introducing perspectives from disability studies into the context of designing innovative pedagogies. One of the important takeaways from this collection is that embracing disability and the alternative perspectives that it can provide presents the potential for new learning opportunities (Lucchesi 2015).

Regardless of which pedagogical approach educators adopt, it is imperative that, unless VR is the subject of the course, they remember it is not the pedagogy. Instead, faculty should keep a diverse array of tools in their pedagogical toolkit that will support an equally diverse set of learners. As librarians, faculty, and instructional designers become familiar with inclusive learning frameworks, they are better positioned for more targeted, meaningful advocacy within their institutions. While it is true that there is a lot of work to be done, it is equally true that it can only be done together, through active involvement in institutional committees and task forces and by ensuring that discussions about accessibility occur in strategic planning and budgeting meetings with administrators. Accessibility awareness needs to be raised throughout libraries and other academic institutions so that the accessibility challenges of emerging technologies are addressed at the design stage and built into pedagogical implementations from the beginning. This will help to ensure that pedagogies founded on emerging technologies will be “born accessible,” for the benefit of learners and educators throughout the academic world.